EECS 224: Large Language Models

This is a new course at UC Merced, starting in Spring 2025. In this course, students will learn the fundamentals of the modeling, theory, ethics, and systems aspects of large language models, and gain hands-on experience working with them.

The Goal of This Course

To offer students useful, fundamental, and detailed LLM knowledge.

Coursework

- In-Course Question Answering

- Final Projects

In-Course Question Answering

In each class, students will be asked several questions. Each student may answer each question only once. The first student who answers a question correctly and explains the answer clearly to the class earns 1 credit. Credits accumulate over the whole semester to form a final question-answering score. This score does not contribute to the final grade. In the final class, the students with the highest question-answering scores will be recognized.

Final Projects

Every student should complete a final project related to LLMs and present it in the final classes. A project report should be completed before the presentation and submitted to the Final Project Google Form. The file should be named “Your Name.pdf”, and the format should follow the 2025 ACL long paper template. Good examples are available at Project Report Examples.

The final project score is computed as 70% instructor’s rating + 30% classmates’ rating. After all the presentations, every student will be asked to choose the 3 best projects. The classmates’ rating is based on the choices made by all the classmates.

Useful Links

Lecture 1: Overview of LLMs

What is Language?

Language is a systematic means of communicating ideas or feelings using conventionalized signs, sounds, gestures, or marks.

More than 7,000 languages are spoken around the world today, shaping how we describe and perceive the world around us. Source: https://www.snexplores.org/article/lets-learn-about-the-science-of-language

Text in Language

Text represents the written form of language, converting speech and meaning into visual symbols. Key aspects include:

Basic Units of Text

Text can be broken down into hierarchical units:

- Characters: The smallest meaningful units in writing systems

- Words: Combinations of characters that carry meaning

- Sentences: Groups of words expressing complete thoughts

- Paragraphs: Collections of related sentences

- Documents: Complete texts serving a specific purpose

Text Properties

Text demonstrates several key properties:

- Linearity: Written symbols appear in sequence

- Discreteness: Clear boundaries between units

- Conventionality: Agreed-upon meanings within a language community

- Structure: Follows grammatical and syntactic rules

- Context: Meaning often depends on surrounding text

Question 1: Could you give some examples in English in which the same word has two different meanings in two different sentences?

Based on the above properties shared by different languages, NLP researchers developed a unified machine learning technique to model language data: Large Language Models. Let’s start learning this unified language modeling technique.

What is a Language Model?

Mathematical Definition

A language model is fundamentally a probability distribution over sequences of words or tokens. Mathematically, it can be expressed as:

\[P(w_1, w_2, ..., w_n) = \prod_{i=1}^{n} P(w_i|w_1, ..., w_{i-1})\]

where:

- \(w_1, w_2, ..., w_n\) represents a sequence of words or tokens

- \(P(w_i|w_1, ..., w_{i-1})\) is the conditional probability of word \(w_i\) given all previous words

For practical implementation, this often takes the form:

\[P(w_t|context) = \text{softmax}(h(context) \cdot W)\]where:

- Target word: \(w_t\)

- Context encoding function: \(h(context)\)

- Weight matrix: \(W\)

- softmax normalizes the output into probabilities
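To make the softmax form concrete, here is a minimal sketch in plain Python. The three-word vocabulary, the context vector `h`, and the weight matrix `W` are made-up toy values (assumptions for illustration only); a real model learns them from data.

```python
import math

def softmax(logits):
    """Turn arbitrary real-valued scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy setup: a 2-dimensional context encoding h(context)
# and a weight matrix W with one column per vocabulary word.
vocab = ["chocolate", "pizza", "rain"]
h = [1.0, 2.0]
W = [
    [0.5, 0.1, -0.3],  # weights applied to h[0]
    [0.8, 0.4, -0.2],  # weights applied to h[1]
]

# logits[j] = sum_k h[k] * W[k][j], i.e. the product h(context) . W
logits = [sum(h[k] * W[k][j] for k in range(len(h))) for j in range(len(vocab))]
probs = softmax(logits)
next_word_distribution = dict(zip(vocab, probs))
```

The resulting values are non-negative and sum to 1, so they can be read as \(P(w_t|context)\) over the toy vocabulary.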

Example 1: Sentence Probability Calculation

Consider the sentence: “I love chocolate.”

The language model predicts the following probabilities:

- \[P(\text{'I'}) = 0.2\]

- \[P(\text{'love'}|\text{'I'}) = 0.4\]

- \[P(\text{'chocolate'}|\text{'I love'}) = 0.5\]

The total probability of the sentence is calculated as:

\(P(\text{'I love chocolate'}) = P(\text{'I'}) \cdot P(\text{'love'}|\text{'I'}) \cdot P(\text{'chocolate'}|\text{'I love'})\)

\(P(\text{'I love chocolate'}) = 0.2 \cdot 0.4 \cdot 0.5 = 0.04\)

Thus, the probability of the sentence “I love chocolate” is 0.04.
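The same chain-rule calculation can be sketched in a few lines of Python. The function name `sequence_probability` is hypothetical; the probabilities are the ones given in this example.

```python
import math

def sequence_probability(conditional_probs):
    """Multiply P(w_i | w_1..w_{i-1}) for i = 1..n (the chain rule)."""
    return math.prod(conditional_probs)

# P('I'), P('love' | 'I'), P('chocolate' | 'I love')
p_sentence = sequence_probability([0.2, 0.4, 0.5])
print(round(p_sentence, 6))  # prints 0.04
```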

Example 2: Dialogue Probability Calculation

For the dialogue:

A: “Hello, how are you?”

B: “I’m fine, thank you.”

The model provides the following probabilities:

- Speaker A’s Sentence:

- \[P(\text{'Hello'}) = 0.3\]

- \[P(\text{','}|\text{'Hello'}) = 0.8\]

- \[P(\text{'how'}|\text{'Hello ,'}) = 0.5\]

- \[P(\text{'are'}|\text{'Hello , how'}) = 0.6\]

- \[P(\text{'you'}|\text{'Hello , how are'}) = 0.7\]

- Speaker B’s Sentence:

- \[P(\text{'I'}) = 0.4\]

- \[P(\text{'m'}|\text{'I'}) = 0.5\]

- \[P(\text{'fine'}|\text{'I m'}) = 0.6\]

- \[P(\text{','}|\text{'I m fine'}) = 0.7\]

- \[P(\text{'thank'}|\text{'I m fine ,'}) = 0.8\]

- \[P(\text{'you'}|\text{'I m fine , thank'}) = 0.9\]

- Total Probability for the Dialogue:

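Multiplying the conditional probabilities within each sentence gives 0.3 · 0.8 · 0.5 · 0.6 · 0.7 = 0.0504 for Speaker A and 0.4 · 0.5 · 0.6 · 0.7 · 0.8 · 0.9 = 0.06048 for Speaker B. For simplicity, this example scores B’s reply independently of A’s sentence and ignores the sentence-final punctuation. Combine the probabilities for both sentences: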

\(P(\text{'Hello, how are you? I\'m fine, thank you.'}) = P(\text{'Hello, how are you?'}) \cdot P(\text{'I\'m fine, thank you.'})\)

\(P(\text{'Hello, how are you? I\'m fine, thank you.'}) = 0.0504 \cdot 0.06048 = 0.003048192\)

Thus, the total probability of the dialogue is approximately 0.00305.
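Because products of many probabilities quickly become very small, implementations usually add log-probabilities instead of multiplying raw probabilities. The sketch below redoes the dialogue calculation that way; the helper name `log_probability` is an assumption for illustration.

```python
import math

# Conditional probabilities from the example, in order
speaker_a = [0.3, 0.8, 0.5, 0.6, 0.7]
speaker_b = [0.4, 0.5, 0.6, 0.7, 0.8, 0.9]

def log_probability(conditional_probs):
    """Sum of log-probabilities, i.e. the log of their product."""
    return sum(math.log(p) for p in conditional_probs)

# Adding logs corresponds to multiplying the two sentence probabilities
log_p_dialogue = log_probability(speaker_a) + log_probability(speaker_b)
p_dialogue = math.exp(log_p_dialogue)
```

Exponentiating `log_p_dialogue` recovers the same value as the direct product, about 0.00305.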

Example 3: Partial Sentence Generation

Consider the sentence: “The dog barked loudly.”

The probabilities assigned by the language model are:

- \[P(\text{'The'}) = 0.25\]

- \[P(\text{'dog'}|\text{'The'}) = 0.4\]

- \[P(\text{'barked'}|\text{'The dog'}) = 0.5\]

- \[P(\text{'loudly'}|\text{'The dog barked'}) = 0.6\]

Question 2: Calculate the total probability of the sentence \(P(\text{'The dog barked loudly'})\) using the given probabilities.

The Transformer Model: Revolutionizing Language Models

The emergence of the Transformer architecture marked a paradigm shift in how machines process and understand human language. Unlike its predecessors, which struggled with long-range patterns in text, this groundbreaking architecture introduced mechanisms that revolutionized natural language processing (NLP).

The Building Blocks of Language Understanding

From Text to Machine-Readable Format

Before any sophisticated processing can occur, raw text must be converted into a format that machines can process. This happens in two crucial stages:

- Text Segmentation

The first challenge is breaking down text into meaningful units. Imagine building with LEGO blocks - just as you need individual blocks to create complex structures, language models need discrete pieces of text to work with. These pieces, called tokens, might be:

- Complete words

- Parts of words

- Individual characters

- Special symbols

For instance, the phrase “artificial intelligence” might become [“art”, “ificial”, “intel”, “ligence”], allowing the model to recognize patterns even in unfamiliar words.

- Numerical Representation

Once we have our text pieces, each token gets transformed into a numerical vector - essentially a long list of numbers. Think of this as giving each word or piece its own unique mathematical “fingerprint” that captures its meaning and relationships with other words.
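The two stages can be sketched as follows. This is a toy illustration, not how any particular model is implemented: the vocabulary is built from the four example pieces only, and the embedding values are random placeholders (real models learn them during training).

```python
import random

# Stage 1 output: the text already segmented into tokens
tokens = ["art", "ificial", "intel", "ligence"]

# Map each distinct token to an integer id (a toy vocabulary)
token_to_id = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
token_ids = [token_to_id[tok] for tok in tokens]

# Stage 2: look up one vector per id in an embedding table.
# The values are random stand-ins for learned parameters.
embedding_dim = 4
rng = random.Random(0)
embedding_table = [
    [rng.uniform(-1.0, 1.0) for _ in range(embedding_dim)]
    for _ in range(len(token_to_id))
]
embedded = [embedding_table[i] for i in token_ids]
```

Each token ends up as a list of `embedding_dim` numbers; the same token always maps to the same row of the table.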

Adding Sequential Understanding

One of the most innovative aspects of Transformers is how they handle word order. Rather than treating text like a bag of unrelated words, the architecture adds precise positional information to each token’s representation.

Consider how the meaning changes in these sentences:

- “The cat chased the mouse”

- “The mouse chased the cat”

The words are identical, but their positions completely change the meaning. The Transformer’s positional encoding system ensures this crucial information isn’t lost.
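One way to add positional information is the sinusoidal encoding from the original Transformer paper; the text above does not say which scheme is meant, so treat this choice as an assumption. The toy 4-dimensional word vectors below are also made up for illustration.

```python
import math

def sinusoidal_position(pos, d_model):
    """Encoding for one position: sine on even dimensions, cosine on odd ones."""
    vec = []
    for i in range(d_model):
        angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
        vec.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return vec

def add_positions(embeddings):
    """Add the position vector to each token embedding, element by element."""
    d_model = len(embeddings[0])
    return [
        [e + p for e, p in zip(emb, sinusoidal_position(pos, d_model))]
        for pos, emb in enumerate(embeddings)
    ]

# Hypothetical toy embeddings (placeholders, not learned values)
emb = {
    "the": [0.1, 0.2, 0.3, 0.4],
    "cat": [0.5, 0.1, 0.0, 0.2],
    "chased": [0.3, 0.3, 0.1, 0.0],
    "mouse": [0.0, 0.4, 0.2, 0.1],
}
s1 = "the cat chased the mouse".split()
s2 = "the mouse chased the cat".split()
x1 = add_positions([emb[w] for w in s1])
x2 = add_positions([emb[w] for w in s2])
```

In this sketch "cat" gets a different final vector in the two sentences (position 1 versus position 4), even though its word embedding is identical, which is what lets the model tell the two sentences apart.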

The Heart of the System: Information Processing

Context Through Self-Attention

The true magic of Transformers lies in their attention mechanism. Unlike humans who must read text sequentially, Transformers can simultaneously analyze relationships between all words in a text. This is similar to how you might solve a complex puzzle:

- First, you look at all the pieces simultaneously

- Then, you identify which pieces are most likely to connect

- Finally, you use these relationships to build the complete picture

In language, this means the model can:

- Resolve pronouns (“She picked up her book” - who is “her” referring to?)

- Understand idiomatic expressions (“kicked the bucket” means something very different from “kicked the ball”)

- Grasp long-distance dependencies (“The keys, which I thought I had left on the kitchen counter yesterday morning, were actually in my coat pocket”)
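A minimal sketch of the attention computation, assuming single-head scaled dot-product self-attention and, as a simplification, using the input vectors directly as queries, keys, and values (real Transformers apply learned projections first). The input `X` is a made-up toy sequence of three 2-dimensional token vectors.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def self_attention(X):
    """Single-head scaled dot-product self-attention with Q = K = V = X."""
    d = len(X[0])
    outputs, weights = [], []
    for q in X:
        # Compare this token with every token in the sequence at once
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in X]
        w = softmax(scores)
        # Output is the attention-weighted average of all token vectors
        out = [sum(w[j] * X[j][c] for j in range(len(X))) for c in range(d)]
        weights.append(w)
        outputs.append(out)
    return outputs, weights

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(X)
```

Each row of `weights` sums to 1 and says how strongly one token attends to every other token; tokens with more similar vectors receive larger weights.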

Real-World Applications and Impact

The Transformer architecture has enabled breakthrough applications in:

- Cross-Language Communication

- Real-time translation systems

- Multilingual document processing

- Content Creation and Analysis

- Automated report generation

- Text summarization

- Content recommendations

- Specialized Industry Applications

- Legal document analysis

- Medical record processing

- Scientific literature review

The Road Ahead

As this architecture continues to evolve, we’re seeing:

- More efficient processing methods

- Better handling of specialized domains

- Improved understanding of contextual nuances

- Enhanced ability to work with multimodal inputs

The Transformer architecture represents more than just a technical advancement - it’s a fundamental shift in how machines can understand and process human language. Its impact continues to grow as researchers and developers find new ways to apply and improve upon its core principles.

The true power of Transformers lies not just in their technical capabilities, but in how they’ve opened new possibilities for human-machine interaction and understanding. As we continue to refine and build upon this architecture, we’re moving closer to systems that can truly understand and engage with human language in all its complexity and nuance.

What are large language models?

Large language models are transformers with billions to trillions of parameters, trained on massive amounts of text data. These models have several distinguishing characteristics:

- Scale: Models contain billions of parameters and are trained on hundreds of billions of tokens

- Architecture: Based on the Transformer architecture with self-attention mechanisms

- Emergent abilities: Complex capabilities that emerge with scale

- Few-shot learning: Ability to adapt to new tasks with few examples

Definition: Large Language Models are artificial intelligence systems trained on vast amounts of text data, typically containing billions to hundreds of billions of parameters. Unlike traditional AI models, they can understand and generate human-like text across a wide range of tasks and domains.

- Scale and Architecture:

- Typically contain >1B parameters (Some exceed 500B)

- Built on Transformer architecture with attention mechanisms

- Require massive computational resources for training

- Examples: GPT-3 (175B), PaLM (540B), LLaMA (65B)

- Key Capabilities:

- Natural language understanding and generation

- Task adaptation without fine-tuning

- Complex reasoning and problem solving

- Knowledge storage and retrieval

- Multi-turn conversation

Historical Evolution

1. Statistical Language Models (SLM) - 1990s

- Core Technology: Used statistical methods to predict next words based on previous context

- Key Features:

- N-gram models (bigram, trigram)

- Markov assumption for word prediction

- Used in early IR and NLP applications

- Limitations:

- Curse of dimensionality

- Data sparsity issues

- Limited context window

- Required smoothing techniques
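As an illustration of a statistical language model, here is a small bigram model with add-k smoothing. The three-sentence corpus and the function name `bigram_prob` are made up for this sketch; the Markov assumption shows up in the fact that each word is predicted from the previous word only.

```python
from collections import Counter

corpus = ["i love chocolate", "i love pizza", "you love chocolate"]

context_counts = Counter()  # how often each word appears as a context
bigram_counts = Counter()   # how often each (previous, next) pair appears
for sentence in corpus:
    words = ["<s>"] + sentence.split()
    context_counts.update(words[:-1])
    bigram_counts.update(zip(words[:-1], words[1:]))

vocab = {w for s in corpus for w in s.split()}

def bigram_prob(prev, word, k=1.0):
    """P(word | prev) with add-k smoothing, so unseen pairs get nonzero mass."""
    return (bigram_counts[(prev, word)] + k) / (context_counts[prev] + k * len(vocab))

p_seen = bigram_prob("love", "chocolate")   # pair observed twice
p_unseen = bigram_prob("love", "you")       # pair never observed
```

Without smoothing, `p_unseen` would be exactly zero, which is the data sparsity problem listed above.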

2. Neural Language Models (NLM) - 2013

- Core Technology: Neural networks for language modeling

- Key Advances:

- Distributed word representations

- Multi-layer perceptron and RNN architectures

- End-to-end learning

- Better feature extraction

- Impact:

- Word2vec and similar embedding models

- Improved generalization

- Reduced need for feature engineering

3. Pre-trained Language Models (PLM) - 2018

- Core Technology: Transformer-based models with pre-training

- Key Innovations:

- BERT and bidirectional context modeling

- GPT and autoregressive modeling

- Transfer learning approach

- Fine-tuning paradigm

- Benefits:

- Context-aware representations

- Better task performance

- Reduced need for task-specific data

- More efficient training

4. Large Language Models (LLM) - 2020+

- Core Technology: Scaled-up Transformer models

- Major Breakthroughs:

- Emergence of new abilities with scale

- Few-shot and zero-shot learning

- General-purpose problem solving

- Human-like interaction capabilities

- Key Examples:

- GPT-3: First demonstration of powerful in-context learning

- ChatGPT: Advanced conversational abilities

- GPT-4: Multimodal capabilities and improved reasoning

- PaLM: Enhanced multilingual and reasoning capabilities

Key Features of LLMs

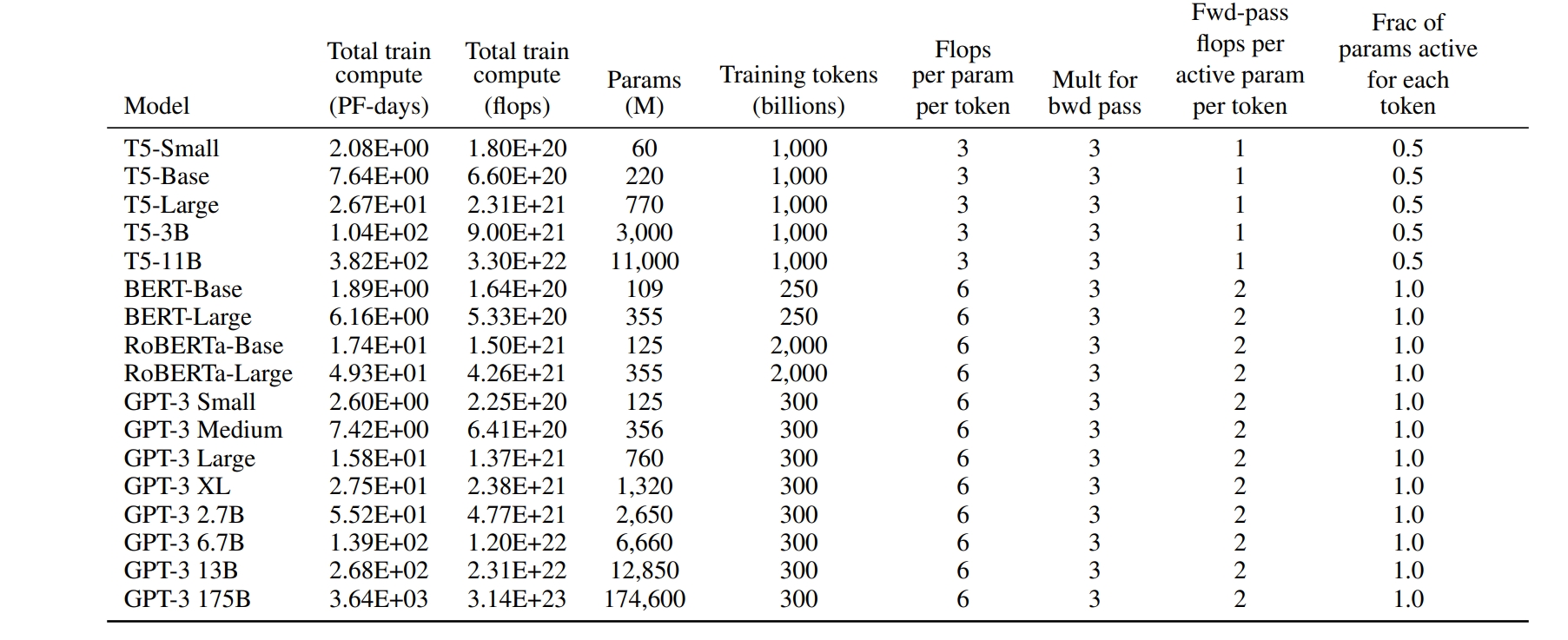

Scaling Laws

- KM Scaling Law (OpenAI):

- Describes relationship between model performance (measured by cross entropy loss $L$) and three factors:

- Model size ($N$)

- Dataset size ($D$)

- Computing power ($C$)

- Mathematical formulations:

- $L(N) = \left(\frac{N_c}{N}\right)^{\alpha_N}$, where $\alpha_N \sim 0.076$, $N_c \sim 8.8 \times 10^{13}$

- $L(D) = \left(\frac{D_c}{D}\right)^{\alpha_D}$, where $\alpha_D \sim 0.095$, $D_c \sim 5.4 \times 10^{13}$

- $L(C) = \left(\frac{C_c}{C}\right)^{\alpha_C}$, where $\alpha_C \sim 0.050$, $C_c \sim 3.1 \times 10^8$

- Predicts diminishing returns as model/data/compute scale increases

- Helps optimize resource allocation for training

- Chinchilla Scaling Law (DeepMind):

- Mathematical formulation:

- $L(N,D) = E + \frac{A}{N^\alpha} + \frac{B}{D^\beta}$

- where $E = 1.69$, $A = 406.4$, $B = 410.7$, $\alpha = 0.34$, $\beta = 0.28$

- Optimal compute allocation:

- $N_{opt}(C) = G\left(\frac{C}{6}\right)^a$

- $D_{opt}(C) = G^{-1}\left(\frac{C}{6}\right)^b$

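- where $G = \left(\frac{\alpha A}{\beta B}\right)^{\frac{1}{\alpha+\beta}}$, $a = \frac{\beta}{\alpha+\beta}$, $b = \frac{\alpha}{\alpha+\beta}$, under the compute constraint $C \approx 6ND$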

- Suggests equal scaling of model and data size

- More efficient compute utilization than KM scaling law

- Demonstrated superior performance with smaller models trained on more data
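The formulas above can be evaluated directly. The sketch below uses the constants quoted in this section; the function names and the compute budget of 1e21 FLOPs are assumptions for illustration. The exponents come from minimizing $\frac{A}{N^\alpha} + \frac{B}{D^\beta}$ subject to $C \approx 6ND$, which gives $a = \frac{\beta}{\alpha+\beta}$ and $b = \frac{\alpha}{\alpha+\beta}$.

```python
def km_loss_from_params(n, n_c=8.8e13, alpha_n=0.076):
    """KM scaling law for model size: L(N) = (N_c / N) ** alpha_N."""
    return (n_c / n) ** alpha_n

def chinchilla_loss(n, d, E=1.69, A=406.4, B=410.7, alpha=0.34, beta=0.28):
    """Chinchilla loss: L(N, D) = E + A / N**alpha + B / D**beta."""
    return E + A / n**alpha + B / d**beta

def chinchilla_optimal(c, A=406.4, B=410.7, alpha=0.34, beta=0.28):
    """Model size and token count minimizing the loss under C = 6 * N * D."""
    G = (alpha * A / (beta * B)) ** (1.0 / (alpha + beta))
    a = beta / (alpha + beta)
    b = alpha / (alpha + beta)
    n_opt = G * (c / 6.0) ** a
    d_opt = (1.0 / G) * (c / 6.0) ** b
    return n_opt, d_opt

budget = 1e21  # hypothetical compute budget in FLOPs
n_opt, d_opt = chinchilla_optimal(budget)
best_loss = chinchilla_loss(n_opt, d_opt)
```

Moving away from `(n_opt, d_opt)` while keeping the same budget, for example doubling the model size and halving the data, gives a higher predicted loss.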

Emergent Abilities

- In-context Learning

- Definition: Ability to learn from examples in the prompt

- Characteristics:

- No parameter updates required

- Few-shot and zero-shot capabilities

- Task adaptation through demonstrations

- Emergence Point:

- GPT-3 showed first strong results
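A few-shot prompt is simply text: an instruction, a handful of demonstrations, and the new input with its answer left blank. The sketch below builds one for a sentiment task; the task, the labels, and the function name `build_few_shot_prompt` are assumptions chosen for illustration, and no model is called.

```python
def build_few_shot_prompt(instruction, demonstrations, query):
    """Assemble instruction + labeled examples + unlabeled query into one prompt."""
    lines = [instruction, ""]
    for text, label in demonstrations:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final example is left unanswered for the model to complete
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as Positive or Negative.",
    [("I loved every minute of it.", "Positive"),
     ("A complete waste of time.", "Negative")],
    "The plot was dull and predictable.",
)
```

The model's parameters are never updated; the demonstrations in the prompt are the only task-specific signal it receives.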

Question 3: Design a few-shot prompt that classifies a film's topic from its name. It must correctly classify more than 5 films proposed by other students. Use ChatGPT as the test LLM.

- Instruction Following

- Definition: Ability to understand and execute natural language instructions

- Requirements:

- Instruction tuning

- Multi-task training

- Natural language task descriptions

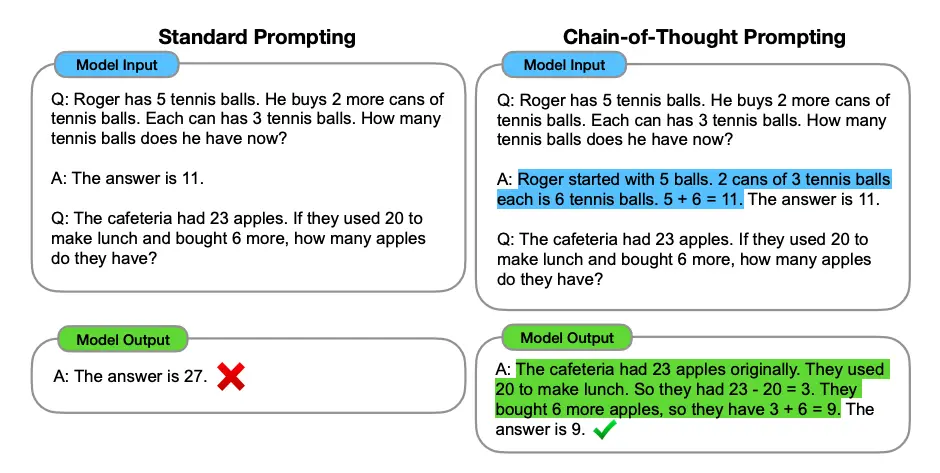

- Step-by-step Reasoning

- Definition: Ability to break down complex problems

- Techniques:

- Chain-of-thought prompting

- Self-consistency methods

- Intermediate step generation

- Benefits:

- Better problem solving

- More reliable answers

- Transparent reasoning process
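Self-consistency can be sketched as a majority vote over the final answers of several independently sampled reasoning chains. The sampled answers below are made up, and the function name `self_consistency_vote` is hypothetical; in practice each answer would come from a separate chain-of-thought generation.

```python
from collections import Counter

def self_consistency_vote(sampled_answers):
    """Return the most common final answer and the fraction of samples agreeing."""
    counts = Counter(sampled_answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(sampled_answers)

# Hypothetical final answers extracted from five sampled reasoning chains
samples = ["18", "18", "20", "18", "17"]
answer, agreement = self_consistency_vote(samples)
```

Here the vote selects "18" with 60% agreement; the agreement fraction can serve as a rough confidence signal.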

Technical Elements

Architecture

- Transformer Base

- Components:

- Multi-head attention mechanism

- Feed-forward neural networks

- Layer normalization

- Positional encoding

- Variations:

- Decoder-only (GPT-style)

- Encoder-decoder (T5-style)

- Modifications for efficiency

- Scaling Considerations

- Hardware Requirements:

- Distributed training systems

- Memory optimization

- Parallel processing

- Architecture Choices:

- Layer count

- Hidden dimension size

- Attention head configuration
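To see how these architecture choices determine model size, the rough estimate below counts only the large weight matrices of a decoder-only Transformer. It assumes a feed-forward hidden size of 4 times the model dimension and ignores biases, layer normalization, and positional parameters, so it is an approximation rather than an exact count. The GPT-3 configuration used (96 layers, hidden size 12288, 96 heads, vocabulary of 50257) is taken from its published description.

```python
def approx_decoder_params(n_layers, d_model, vocab_size=0):
    """Rough parameter count for a decoder-only Transformer."""
    attention = 4 * d_model * d_model     # query, key, value, and output projections
    feed_forward = 8 * d_model * d_model  # two matrices with a 4x hidden expansion
    embeddings = vocab_size * d_model     # token embedding table
    return n_layers * (attention + feed_forward) + embeddings

gpt3_params = approx_decoder_params(n_layers=96, d_model=12288, vocab_size=50257)
head_dim = 12288 // 96  # per-head dimension when using 96 attention heads
```

The estimate lands close to the 175B figure quoted earlier, which shows that layer count and hidden dimension account for almost all of the parameters.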

Training Process

- Pre-training

- Data Preparation:

- Web text

- Books

- Code

- Scientific papers

- Objectives:

- Next token prediction

- Masked language modeling

- Multiple auxiliary tasks

- Adaptation Methods

- Instruction Tuning:

- Natural language task descriptions

- Multi-task learning

- Task generalization

- RLHF:

- Human preference learning

- Safety alignment

- Behavior optimization

Utilization Techniques

- Prompting Strategies

- Basic Prompting:

- Direct instructions

- Few-shot examples

- Zero-shot prompts

- Advanced Methods:

- Chain-of-thought

- Self-consistency

- Tool augmentation

- Application Patterns

- Task Types:

- Generation

- Classification

- Question answering

- Coding

- Integration Methods:

- API endpoints

- Model serving

- Application backends

Major Milestones

ChatGPT (2022)

- Technical Achievements

- Advanced dialogue capabilities

- Robust safety measures

- Consistent persona

- Tool integration

- Impact

- Widespread adoption

- New application paradigms

- Industry transformation

- Public AI awareness

GPT-4 (2023)

- Key Advances

- Multimodal understanding

- Enhanced reliability

- Better reasoning

- Improved safety

- Technical Features

- Predictable scaling

- Vision capabilities

- Longer context window

- Advanced system prompting

Challenges and Future Directions

Current Challenges

- Computational Resources

- Training Costs:

- Massive energy requirements

- Expensive hardware needs

- Limited accessibility

- Infrastructure Needs:

- Specialized facilities

- Cooling systems

- Power management

- Data Requirements

- Quality Issues:

- Data cleaning

- Content filtering

- Bias mitigation

- Privacy Concerns:

- Personal information

- Copyright issues

- Regulatory compliance

- Safety and Alignment

- Technical Challenges:

- Hallucination prevention

- Truthfulness

- Bias detection

- Ethical Considerations:

- Harm prevention

- Fairness

- Transparency

Future Directions

- Improved Efficiency

- Architecture Innovation:

- Sparse attention

- Parameter efficiency

- Memory optimization

- Training Methods:

- Better scaling laws

- Efficient fine-tuning

- Reduced compute needs

- Enhanced Capabilities

- Multimodal Understanding:

- Vision-language integration

- Audio processing

- Sensor data interpretation

- Reasoning Abilities:

- Logical deduction

- Mathematical problem solving

- Scientific reasoning

- Safety Development

- Alignment Techniques:

- Value learning

- Preference optimization

- Safety bounds

- Evaluation Methods:

- Robustness testing

- Safety metrics

- Bias assessment

Summary

- LLMs represent a fundamental shift in AI capabilities

- Scale and architecture drive emergent abilities

- Continuing rapid development in capabilities

- Balance between advancement and safety

- Growing impact on society and technology

- Need for responsible development and deployment

References and Further Reading

- Scaling Laws Papers

- Emergent Abilities Research

- Safety and Alignment Studies

- Technical Documentation

- Industry Reports

Paper Reading: A Survey of Large Language Models

Lecture 2: Understanding Tokenization in Language Models

Tokenization is a fundamental concept in Natural Language Processing (NLP) that involves breaking down text into smaller units called tokens. These tokens can be words, subwords, or characters, depending on the tokenization strategy used. The choice of tokenization method can significantly impact a model’s performance and its ability to handle various languages and vocabularies.

Common Tokenization Approaches

- Word-based Tokenization

- Splits text at word boundaries (usually spaces and punctuation)

- Simple and intuitive but struggles with out-of-vocabulary words

- Requires a large vocabulary to cover most words

- Examples: Word2vec and GloVe use word-level vocabularies (BERT's WordPiece, by contrast, is a subword method)

- Character-based Tokenization

- Splits text into individual characters

- Very small vocabulary size

- Can handle any word but loses word-level meaning

- Typically results in longer sequences

- Subword Tokenization

- Breaks words into meaningful subunits

- Balances vocabulary size and semantic meaning

- Better handles rare words

- Popular methods include:

- Byte-Pair Encoding (BPE)

- WordPiece

- Unigram

- SentencePiece
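The trade-off between the first two approaches can be seen on a tiny example. This is a simplified sketch: the regular expression used for word splitting and the one-sentence "training" text are assumptions for illustration, not what production tokenizers do.

```python
import re

def word_tokenize(text):
    """Split into runs of word characters and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

def char_tokenize(text):
    """Split into individual characters, spaces included."""
    return list(text)

# Build both vocabularies from one toy training sentence
train_text = "The cat sat."
word_vocab = set(word_tokenize(train_text))
char_vocab = set(char_tokenize(train_text))

# Tokenize a new sentence containing a word that was never seen in training
new_text = "The hat sat."
word_level = [w if w in word_vocab else "[UNK]" for w in word_tokenize(new_text)]
char_level = [c if c in char_vocab else "[UNK]" for c in char_tokenize(new_text)]
```

The word-level tokenizer produces a short sequence but loses "hat" to the unknown token, while the character-level tokenizer covers every character at the cost of a sequence three times as long. Subword methods aim for a middle ground.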

Let’s dive deep into one of the most widely used subword tokenization methods: Byte-Pair Encoding (BPE).

Byte-Pair Encoding (BPE) Tokenization

Reference Tutorial: Byte-Pair Encoding tokenization

Byte-Pair Encoding (BPE) was initially developed as an algorithm to compress texts, and then used by OpenAI for tokenization when pretraining the GPT model. It’s used by many Transformer models, including GPT, GPT-2, Llama1, Llama2, Llama3, RoBERTa, BART, and DeBERTa.

Training Algorithm

BPE training starts by computing the unique set of words used in the corpus (after the normalization and pre-tokenization steps are completed), then building the vocabulary by taking all the symbols used to write those words. As a very simple example, let’s say our corpus uses these five words:

"hug", "pug", "pun", "bun", "hugs"

The base vocabulary will then be ["b", "g", "h", "n", "p", "s", "u"]. For real-world cases, that base vocabulary will contain all the ASCII characters, at the very least, and probably some Unicode characters as well. If an example you are tokenizing uses a character that is not in the training corpus, that character will be converted to the unknown token. That’s one reason why lots of NLP models are very bad at analyzing content with emojis.

The GPT-2 and RoBERTa tokenizers (which are pretty similar) have a clever way to deal with this: they don’t look at words as being written with Unicode characters, but with bytes. This way the base vocabulary has a small size (256), but every character you can think of will still be included and not end up being converted to the unknown token. This trick is called byte-level BPE.
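The idea behind byte-level BPE can be seen by looking at the UTF-8 bytes of a string. This sketch only shows the byte view; actual tokenizers additionally map each byte to a printable character before learning merges, a detail omitted here.

```python
text = "hug \U0001F917"  # the word "hug" followed by an emoji

# Every string, whatever characters it contains, is a sequence of bytes 0..255
byte_tokens = list(text.encode("utf-8"))

# The mapping is lossless: the original text can always be recovered
restored = bytes(byte_tokens).decode("utf-8")
```

The emoji becomes several byte values rather than an unknown token, so a base vocabulary of 256 entries is enough to represent any input.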

After getting this base vocabulary, we add new tokens until the desired vocabulary size is reached by learning merges, which are rules to merge two elements of the existing vocabulary together into a new one. So, at the beginning these merges will create tokens with two characters, and then, as training progresses, longer subwords.

At any step during the tokenizer training, the BPE algorithm will search for the most frequent pair of existing tokens (by “pair,” here we mean two consecutive tokens in a word). That most frequent pair is the one that will be merged, and we rinse and repeat for the next step.

Going back to our previous example, let’s assume the words had the following frequencies:

("hug", 10), ("pug", 5), ("pun", 12), ("bun", 4), ("hugs", 5)

meaning “hug” was present 10 times in the corpus, “pug” 5 times, “pun” 12 times, “bun” 4 times, and “hugs” 5 times. We start the training by splitting each word into characters (the ones that form our initial vocabulary) so we can see each word as a list of tokens:

("h" "u" "g", 10), ("p" "u" "g", 5), ("p" "u" "n", 12), ("b" "u" "n", 4), ("h" "u" "g" "s", 5)

Then we look at pairs. The pair (“h”, “u”) is present in the words “hug” and “hugs”, so 15 times total in the corpus. It’s not the most frequent pair, though: that honor belongs to (“u”, “g”), which is present in “hug”, “pug”, and “hugs”, for a grand total of 20 times in the corpus.

Thus, the first merge rule learned by the tokenizer is (“u”, “g”) -> “ug”, which means that “ug” will be added to the vocabulary, and the pair should be merged in all the words of the corpus. At the end of this stage, the vocabulary and corpus look like this:

Vocabulary: ["b", "g", "h", "n", "p", "s", "u", "ug"]

Corpus: ("h" "ug", 10), ("p" "ug", 5), ("p" "u" "n", 12), ("b" "u" "n", 4), ("h" "ug" "s", 5)

Now we have some pairs that result in a token longer than two characters: the pair (“h”, “ug”), for instance (present 15 times in the corpus). The most frequent pair at this stage is (“u”, “n”), however, present 16 times in the corpus, so the second merge rule learned is (“u”, “n”) -> “un”. Adding that to the vocabulary and merging all existing occurrences leads us to:

Vocabulary: ["b", "g", "h", "n", "p", "s", "u", "ug", "un"]

Corpus: ("h" "ug", 10), ("p" "ug", 5), ("p" "un", 12), ("b" "un", 4), ("h" "ug" "s", 5)

Now the most frequent pair is (“h”, “ug”), so we learn the merge rule (“h”, “ug”) -> “hug”, which gives us our first three-letter token. After the merge, the corpus looks like this:

Vocabulary: ["b", "g", "h", "n", "p", "s", "u", "ug", "un", "hug"]

Corpus: ("hug", 10), ("p" "ug", 5), ("p" "un", 12), ("b" "un", 4), ("hug" "s", 5)

And we continue like this until we reach the desired vocabulary size.
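The walkthrough above can be reproduced with a compact training loop. This is a minimal sketch on the five-word toy corpus, with a hypothetical function name `train_bpe`; it skips normalization and pre-tokenization and is not optimized for real corpora.

```python
from collections import Counter

def train_bpe(word_freqs, num_merges):
    """Learn BPE merge rules from a {word: frequency} dictionary."""
    splits = {word: list(word) for word in word_freqs}
    vocab = sorted({ch for word in word_freqs for ch in word})
    merges = []
    for _ in range(num_merges):
        # Count every adjacent pair, weighted by word frequency
        pair_counts = Counter()
        for word, freq in word_freqs.items():
            symbols = splits[word]
            for left, right in zip(symbols, symbols[1:]):
                pair_counts[(left, right)] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        vocab.append(best[0] + best[1])
        # Apply the new merge rule to every word
        for word, symbols in splits.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            splits[word] = merged
    return vocab, merges, splits

word_freqs = {"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}
vocab, merges, splits = train_bpe(word_freqs, num_merges=3)
```

With three merges, `merges` contains ("u", "g"), ("u", "n"), and ("h", "ug") in that order, matching the steps traced by hand.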

Tokenization Inference

Tokenization inference follows the training process closely, in the sense that new inputs are tokenized by applying the following steps:

- Splitting the words into individual characters

- Applying the merge rules learned in order on those splits

Let’s take the example we used during training, with the three merge rules learned:

("u", "g") -> "ug"

("u", "n") -> "un"

("h", "ug") -> "hug"

The word “bug” will be tokenized as ["b", "ug"]. “mug”, however, will be tokenized as ["[UNK]", "ug"] since the letter “m” was not in the base vocabulary. Likewise, the word “thug” will be tokenized as ["[UNK]", "hug"]: the letter “t” is not in the base vocabulary, and applying the merge rules results first in “u” and “g” being merged and then “h” and “ug” being merged.
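These three cases can be checked with a short sketch of the inference procedure. The function name `bpe_tokenize_word` is hypothetical, and mapping out-of-vocabulary characters to "[UNK]" before merging follows the description above.

```python
def bpe_tokenize_word(word, base_vocab, merges, unk="[UNK]"):
    """Split a word into characters, then apply the merge rules in learned order."""
    symbols = [ch if ch in base_vocab else unk for ch in word]
    for left, right in merges:
        merged, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and symbols[i] == left and symbols[i + 1] == right:
                merged.append(left + right)
                i += 2
            else:
                merged.append(symbols[i])
                i += 1
        symbols = merged
    return symbols

base_vocab = {"b", "g", "h", "n", "p", "s", "u"}
merges = [("u", "g"), ("u", "n"), ("h", "ug")]

bug = bpe_tokenize_word("bug", base_vocab, merges)
mug = bpe_tokenize_word("mug", base_vocab, merges)
thug = bpe_tokenize_word("thug", base_vocab, merges)
```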

Implementing BPE

Now let’s take a look at an implementation of the BPE algorithm. This won’t be an optimized version you can actually use on a big corpus; we just want to show you the code so you can understand the algorithm a little bit better.

A Colab notebook is provided so you can easily reproduce the experiments in this part: Colab BPE

Training BPE

First we need a corpus, so let’s create a simple one with a few sentences:

    corpus = [
        "This is the Hugging Face Course.",
        "This chapter is about tokenization.",
        "This section shows several tokenizer algorithms.",
        "Hopefully, you will be able to understand how they are trained and generate tokens.",
    ]

Next, we need to pre-tokenize that corpus into words. Since we are replicating a BPE tokenizer (like GPT-2), we will use the gpt2 tokenizer for the pre-tokenization:

    from transformers import AutoTokenizer

    # Load the GPT-2 tokenizer and reuse only its pre-tokenizer
    gpt2_tokenizer = AutoTokenizer.from_pretrained("gpt2")
    pre_tokenize_function = gpt2_tokenizer.backend_tokenizer.pre_tokenizer.pre_tokenize_str

    # Pre-tokenize every sentence into (word, (start, end)) pairs
    pre_tokenized_corpus = [pre_tokenize_function(text) for text in corpus]

We have the output

[

[('This', (0, 4)), ('Ġis', (4, 7)), ('Ġthe', (7, 11)), ('ĠHugging', (11, 19)), ('ĠFace', (19, 24)), ('ĠCourse', (24, 31)), ('.', (31, 32))],

[('This', (0, 4)), ('Ġchapter', (4, 12)), ('Ġis', (12, 15)), ('Ġabout', (15, 21)), ('Ġtokenization', (21, 34)), ('.', (34, 35))],

[('This', (0, 4)), ('Ġsection', (4, 12)), ('Ġshows', (12, 18)), ('Ġseveral', (18, 26)), ('Ġtokenizer', (26, 36)), ('Ġalgorithms', (36, 47)), ('.', (47, 48))],

[('Hopefully', (0, 9)), (',', (9, 10)), ('Ġyou', (10, 14)), ('Ġwill', (14, 19)), ('Ġbe', (19, 22)), ('Ġable', (22, 27)), ('Ġto', (27, 30)), ('Ġunderstand', (30, 41)), ('Ġhow', (41, 45)), ('Ġthey', (45, 50)), ('Ġare', (50, 54)), ('Ġtrained', (54, 62)), ('Ġand', (62, 66)), ('Ġgenerate', (66, 75)), ('Ġtokens', (75, 82)), ('.', (82, 83))]

]

Then we compute the frequencies of each word in the corpus as we do the pre-tokenization:

    from collections import defaultdict

    # Count how often each pre-tokenized word occurs in the corpus
    word2count = defaultdict(int)
    for split_text in pre_tokenized_corpus:
        for word, _ in split_text:
            word2count[word] += 1

The obtained word2count is as follows:

defaultdict(<class 'int'>, {'This': 3, 'Ġis': 2, 'Ġthe': 1, 'ĠHugging': 1, 'ĠFace': 1, 'ĠCourse': 1, '.': 4, 'Ġchapter': 1, 'Ġabout': 1, 'Ġtokenization': 1, 'Ġsection': 1, 'Ġshows': 1, 'Ġseveral': 1, 'Ġtokenizer': 1, 'Ġalgorithms': 1, 'Hopefully': 1, ',': 1, 'Ġyou': 1, 'Ġwill': 1, 'Ġbe': 1, 'Ġable': 1, 'Ġto': 1, 'Ġunderstand': 1, 'Ġhow': 1, 'Ġthey': 1, 'Ġare': 1, 'Ġtrained': 1, 'Ġand': 1, 'Ġgenerate': 1, 'Ġtokens': 1})

The next step is to compute the base vocabulary, formed by all the characters used in the corpus:

    # Collect every character that appears in any word
    vocab_set = set()
    for word in word2count:
        vocab_set.update(list(word))
    vocabs = list(vocab_set)

The obtained base vocabulary is as follows:

['i', 't', 'p', 'o', 'r', 'm', 'e', ',', 'y', 'v', 'Ġ', 'F', 'a', 'C', 'H', '.', 'f', 'l', 'u', 'c', 'T', 'k', 'h', 'z', 'd', 'g', 'w', 'n', 's', 'b']

We now need to split each word into individual characters, to be able to start training:

word2splits = {word: [c for c in word] for word in word2count}

The output is:

'This': ['T', 'h', 'i', 's'],

'Ġis': ['Ġ', 'i', 's'],

'Ġthe': ['Ġ', 't', 'h', 'e'],

...

'Ġand': ['Ġ', 'a', 'n', 'd'],

'Ġgenerate': ['Ġ', 'g', 'e', 'n', 'e', 'r', 'a', 't', 'e'],

'Ġtokens': ['Ġ', 't', 'o', 'k', 'e', 'n', 's']

Now that we are ready for training, let’s write a function that computes the frequency of each pair. We’ll need to use this at each step of the training:

    def _compute_pair2score(word2splits, word2count):
        # Sum, over all words, how often each adjacent token pair occurs
        pair2count = defaultdict(int)
        for word, word_count in word2count.items():
            split = word2splits[word]
            if len(split) == 1:
                continue
            for i in range(len(split) - 1):
                pair = (split[i], split[i + 1])
                pair2count[pair] += word_count
        return pair2count

The output is

defaultdict(<class 'int'>, {('T', 'h'): 3, ('h', 'i'): 3, ('i', 's'): 5, ('Ġ', 'i'): 2, ('Ġ', 't'): 7, ('t', 'h'): 3, ..., ('n', 's'): 1})

Now, finding the most frequent pair only takes a quick loop:

    def _compute_most_score_pair(pair2count):
        # Return the pair with the highest count (the first one seen wins ties)
        best_pair = None
        max_freq = None
        for pair, freq in pair2count.items():
            if max_freq is None or max_freq < freq:
                best_pair = pair
                max_freq = freq
        return best_pair

After counting, the current pair with the highest frequency is: (‘Ġ’, ‘t’), occurring 7 times. We merge (‘Ġ’, ‘t’) into a single token and add it to the vocabulary. Simultaneously, we add the merge rule (‘Ġ’, ‘t’) to our list of merge rules.

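    merge_rules = []

    # Count pairs on the current splits, then record the most frequent one
    pair2score = _compute_pair2score(word2splits, word2count)
    best_pair = _compute_most_score_pair(pair2score)
    vocabs.append(best_pair[0] + best_pair[1])
    merge_rules.append(best_pair)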

Now the vocabulary is

['i', 't', 'p', 'o', 'r', 'm', 'e', ',', 'y', 'v', 'Ġ', 'F', 'a', 'C', 'H', '.', 'f', 'l', 'u', 'c', 'T', 'k', 'h', 'z', 'd', 'g', 'w', 'n', 's', 'b',

'Ġt']

Based on the updated vocabulary, we re-split the words in word2count. In the implementation, we can apply the new merge rule (‘Ġ’, ‘t’) directly to the existing word2splits. This is more efficient than re-splitting every word from scratch, because only the latest merge rule has to be applied.

    def _merge_pair(a, b, word2splits):
        # Replace every adjacent (a, b) in each word's split with the merged token a + b
        new_word2splits = dict()
        for word, split in word2splits.items():
            if len(split) == 1:
                new_word2splits[word] = split
                continue
            i = 0
            while i < len(split) - 1:
                if split[i] == a and split[i + 1] == b:
                    split = split[:i] + [a + b] + split[i + 2:]
                else:
                    i += 1
            new_word2splits[word] = split
        return new_word2splits

After applying word2splits = _merge_pair(best_pair[0], best_pair[1], word2splits), the new word2splits is

{'This': ['T', 'h', 'i', 's'],

'Ġis': ['Ġ', 'i', 's'],

'Ġthe': ['Ġt', 'h', 'e'],

'ĠHugging': ['Ġ', 'H', 'u', 'g', 'g', 'i', 'n', 'g'],

...

'Ġtokens': ['Ġt', 'o', 'k', 'e', 'n', 's']}

As we can see, the new word2splits now contains the newly merged token “Ġt”. We repeat this iterative process until the vocabulary size reaches our predefined target size.

    vocab_size = 50  # target vocabulary size

    while len(vocabs) < vocab_size:
        pair2score = _compute_pair2score(word2splits, word2count)
        best_pair = _compute_most_score_pair(pair2score)
        vocabs.append(best_pair[0] + best_pair[1])
        merge_rules.append(best_pair)
        word2splits = _merge_pair(best_pair[0], best_pair[1], word2splits)

Let’s say our target vocabulary size is 50. After the above iterations, we obtain the following vocabulary and merge rules:

vocabs = ['i', 't', 'p', 'o', 'r', 'm', 'e', ',', 'y', 'v', 'Ġ', 'F', 'a', 'C', 'H', '.', 'f', 'l', 'u', 'c', 'T', 'k', 'h', 'z', 'd', 'g', 'w', 'n', 's', 'b', 'Ġt', 'is', 'er', 'Ġa', 'Ġto', 'en', 'Th', 'This', 'ou', 'se', 'Ġtok', 'Ġtoken', 'nd', 'Ġis', 'Ġth', 'Ġthe', 'in', 'Ġab', 'Ġtokeni', 'Ġtokeniz']

merge_rules = [('Ġ', 't'), ('i', 's'), ('e', 'r'), ('Ġ', 'a'), ('Ġt', 'o'), ('e', 'n'), ('T', 'h'), ('Th', 'is'), ('o', 'u'), ('s', 'e'), ('Ġto', 'k'), ('Ġtok', 'en'), ('n', 'd'), ('Ġ', 'is'), ('Ġt', 'h'), ('Ġth', 'e'), ('i', 'n'), ('Ġa', 'b'), ('Ġtoken', 'i'), ('Ġtokeni', 'z')]

Thus, we have completed the training of our BPE tokenizer based on the given corpus. This trained tokenizer, consisting of the vocabulary and merge rules, can now be used to tokenize new input text using the learned subword patterns.
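To see the whole training loop run end to end, here is a compact, self-contained sketch on a made-up four-word corpus. The function name `train_bpe`, the toy `word2count`, and the sorted initial alphabet are assumptions for illustration. Pair counting is done inline rather than through the helper functions used above.

```python
from collections import Counter


def train_bpe(word2count, vocab_size):
    """Toy BPE trainer: returns (vocabs, merge_rules)."""
    # Start from character-level splits and a character-level vocabulary.
    word2splits = {word: list(word) for word in word2count}
    vocabs = sorted({ch for word in word2count for ch in word})
    merge_rules = []
    while len(vocabs) < vocab_size:
        # Count adjacent token pairs, weighted by word frequency.
        pair2count = Counter()
        for word, split in word2splits.items():
            for left, right in zip(split, split[1:]):
                pair2count[(left, right)] += word2count[word]
        if not pair2count:  # every word is already a single token
            break
        a, b = max(pair2count, key=pair2count.get)
        vocabs.append(a + b)
        merge_rules.append((a, b))
        # Apply only the newest rule to the existing splits.
        for word, split in word2splits.items():
            i = 0
            while i < len(split) - 1:
                if split[i] == a and split[i + 1] == b:
                    split = split[:i] + [a + b] + split[i + 2:]
                else:
                    i += 1
            word2splits[word] = split
    return vocabs, merge_rules


# Hypothetical word frequencies (10 distinct characters in total).
word2count = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
vocabs, merge_rules = train_bpe(word2count, vocab_size=13)
print(merge_rules)  # [('e', 's'), ('es', 't'), ('l', 'o')]
```

With 10 initial characters and a target size of 13, exactly three merges are learned. Ties between equally frequent pairs are broken by whichever pair was counted first.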

BPE’s Inference

During the inference phase, given a sentence, we need to split it into a sequence of tokens. The implementation involves two main steps:

- First, we pre-tokenize the sentence and split each word into a character-level sequence.

- Then, we apply the merge rules sequentially, in the order they were learned, to form larger tokens.

    def tokenize(text):
        # Pre-tokenize the text into words
        words = [word for word, _ in pre_tokenize_str(text)]
        # Split each word into characters
        splits = [[c for c in word] for word in words]
        # Apply the merge rules in the order they were learned
        for merge_rule in merge_rules:
            for index, split in enumerate(splits):
                i = 0
                while i < len(split) - 1:
                    if split[i] == merge_rule[0] and split[i + 1] == merge_rule[1]:
                        split = split[:i] + ["".join(merge_rule)] + split[i + 2:]
                    else:
                        i += 1
                splits[index] = split
        # Flatten the per-word token lists into a single list
        return sum(splits, [])

For example:

    >>> tokenize("This is not a token.")
    ['This', 'Ġis', 'Ġ', 'n', 'o', 't', 'Ġa', 'Ġtoken', '.']

Question 1: Given the tokenizer introduced in Lecture 2, what is the tokenization result of the string “This is a token.”?

Lecture 3: Transformer Architecture

Table of Contents

- Introduction

- Background

- General Transformer Architecture

- Attention Mechanism

- Position-Wise Feed-Forward Networks

- Training and Optimization

- Conclusion

Introduction

The Transformer model is a powerful deep learning architecture that has achieved groundbreaking results in various fields—most notably in Natural Language Processing (NLP), computer vision, and speech recognition—since it was introduced in Attention Is All You Need (Vaswani et al., 2017). Its core component is the self-attention mechanism, which efficiently handles long-range dependencies in sequences while allowing for extensive parallelization. Many subsequent models, such as BERT, GPT, Vision Transformer (ViT), and multimodal Transformers, are built upon this foundational structure.

Background

Before the Transformer, sequential modeling primarily relied on Recurrent Neural Networks (RNNs) or Convolutional Neural Networks (CNNs). These networks often struggled with capturing long-distance dependencies, parallelization, and computational efficiency. In contrast, the self-attention mechanism of Transformers captures global dependencies across input and output sequences simultaneously and offers excellent parallelization capabilities.

General Transformer Architecture

Modern Transformer architectures typically fall into one of three categories: encoder-decoder, encoder-only, or decoder-only, depending on the application scenario.

Encoder-Decoder Transformers

An encoder-decoder Transformer first encodes the input sequence into a contextual representation, then the decoder uses this encoded information to generate the target sequence. Typical applications include machine translation and text summarization. Models like T5 and MarianMT are representative of this structure.

Encoder-Only Transformers

Encoder-only models focus on learning bidirectional contextual representations of input sequences for classification, retrieval, and language understanding tasks. BERT and its variants (RoBERTa, ALBERT, etc.) belong to this category.

Decoder-Only Transformers

Decoder-only models generate outputs in an autoregressive manner, making them well-suited for text generation, dialogue systems, code generation, and more. GPT series, LLaMA, and PaLM are examples of this type.

Attention Mechanism

The core of the Transformer lies in its attention mechanism, which allows the model to focus on the most relevant parts of the input sequence given a query. Below, we detail the Scaled Dot-Product Attention and the Multi-Head Attention mechanisms.

What is Attention?

The attention mechanism describes a relatively recent family of layers in neural networks that has attracted a lot of interest in the past few years, especially in sequence tasks. There are many possible definitions of “attention” in the literature, but the one we will use here is the following: the attention mechanism describes a weighted average of (sequence) elements with the weights dynamically computed based on an input query and the elements’ keys. So what exactly does this mean? The goal is to take an average over the features of multiple elements. However, instead of weighting each element equally, we want to weight them depending on their actual values. In other words, we want to dynamically decide which inputs we want to “attend” to more than others. In particular, an attention mechanism usually has four parts we need to specify:

- Query: The query is a feature vector that describes what we are looking for in the sequence, i.e. what would we maybe want to pay attention to.

- Keys: For each input element, we have a key which is again a feature vector. This feature vector roughly describes what the element is “offering”, or when it might be important. The keys should be designed such that we can identify the elements we want to pay attention to based on the query.

- Values: For each input element, we also have a value vector. This feature vector is the one we want to average over.

- Score function: To rate which elements we want to pay attention to, we need to specify a score function $f_{attn}$. The score function takes the query and a key as input, and outputs the score/attention weight of the query-key pair. It is usually implemented by simple similarity metrics like a dot product, or a small MLP.

The weights of the average are calculated by a softmax over all score function outputs. Hence, we assign those value vectors a higher weight whose corresponding key is most similar to the query. If we try to describe it with pseudo-math, we can write:

\[\alpha_i = \frac{\exp\left(f_{attn}\left(\text{key}_i, \text{query}\right)\right)}{\sum_j \exp\left(f_{attn}\left(\text{key}_j, \text{query}\right)\right)}, \hspace{5mm} \text{out} = \sum_i \alpha_i \cdot \text{value}_i\]

Visually, we can show the attention over a sequence of words as follows:

Attention Example

For every word, we have one key and one value vector. The query is compared to all keys with a score function (in this case the dot product) to determine the weights. The softmax is not visualized for simplicity. Finally, the value vectors of all words are averaged using the attention weights.

Most attention mechanisms differ in terms of what queries they use, how the key and value vectors are defined, and what score function is used. The attention applied inside the Transformer architecture is called self-attention. In self-attention, each sequence element provides a key, value, and query. For each element, we perform an attention layer where, based on its query, we check the similarity of all the sequence elements’ keys and return a different, averaged value vector for each element. We will now go into a bit more detail by first looking at the specific implementation of the attention mechanism, which in the Transformer case is the scaled dot-product attention.
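The weighted-average definition above can be sketched in a few lines of plain Python for a single query. This is a minimal illustration that assumes a dot-product score function. The helper name `attend` and the toy keys and values are made up for the example.

```python
import math


def attend(query, keys, values):
    """Return (weights, output) for one query using dot-product scores."""
    # Score function: dot product between the query and each key.
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    # Softmax over the scores (shifted by the max for numerical stability).
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Output: weighted average of the value vectors.
    dim = len(values[0])
    out = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, out


# Three toy elements, each with a 2-dimensional key and value.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, out = attend([2.0, 0.0], keys, values)
print(weights)
print(out)
```

The query `[2.0, 0.0]` matches the first and third keys equally well, so those two elements receive the same weight and the second element receives much less.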

Scaled Dot-Product Attention

Given a query matrix $Q$, key matrix $K$, and value matrix $V$, the attention formula is:

\[\text{Attention}(Q, K, V) = \text{softmax}\Bigl( \frac{QK^T}{\sqrt{d_k}} \Bigr)V\]

where $d_k$ is the dimensionality of the key vectors (which must match the query dimensionality for the dot product to be defined). Every row of $Q$ corresponds to a token’s embedding.

Example 1: Detailed Numerical Computation

Suppose we have the following matrices (small dimensions chosen for illustrative purposes):

\[Q = \begin{bmatrix} 1 & 0 \\ 0 & 1 \\ 1 & 1 \end{bmatrix}, \quad K = \begin{bmatrix} 1 & 1 \\ 0 & 1 \\ 1 & 0 \end{bmatrix}, \quad V = \begin{bmatrix} 0 & 2 \\ 1 & 1 \\ 2 & 0 \end{bmatrix}\]

- Compute $QK^T$

  According to the example setup:

  \[QK^T = \begin{bmatrix} 1 & 0 & 1 \\ 1 & 1 & 0 \\ 2 & 1 & 1 \end{bmatrix}\]

- Scale by $\sqrt{d_k}$

  Here, $d_k = 2$. Thus, $\sqrt{2} \approx 1.41$. So,

  \[\frac{QK^T}{\sqrt{2}} \approx \begin{bmatrix} 0.71 & 0 & 0.71 \\ 0.71 & 0.71 & 0 \\ 1.41 & 0.71 & 0.71 \end{bmatrix}\]

- Apply softmax row-wise

  The softmax of a vector $x$ is given by \(\text{softmax}(x_i) = \frac{e^{x_i}}{\sum_j e^{x_j}}.\) Let’s calculate this row by row:

  - Row 1: $[0.71, 0, 0.71]$
    - Calculate exponentials:
      - $e^{0.71} \approx 2.034$ (for the 1st and 3rd elements)
      - $e^{0} = 1$ (for the 2nd element)
    - Sum of exponentials: $2.034 + 1 + 2.034 \approx 5.068$
    - Softmax values:
      - $\frac{2.034}{5.068} \approx 0.401$
      - $\frac{1}{5.068} \approx 0.197$
      - $\frac{2.034}{5.068} \approx 0.401$
    - Final result: $[0.401, 0.197, 0.401]$ ≈ $[0.40, 0.20, 0.40]$

  - Row 2: $[0.71, 0.71, 0]$
    - Calculate exponentials:
      - $e^{0.71} \approx 2.034$ (for the 1st and 2nd elements)
      - $e^{0} = 1$ (for the 3rd element)
    - Sum of exponentials: $2.034 + 2.034 + 1 \approx 5.068$
    - Softmax values:
      - $\frac{2.034}{5.068} \approx 0.401$
      - $\frac{2.034}{5.068} \approx 0.401$
      - $\frac{1}{5.068} \approx 0.197$
    - Final result: $[0.401, 0.401, 0.197]$ ≈ $[0.40, 0.40, 0.20]$

  - Row 3: $[1.41, 0.71, 0.71]$
    - Calculate exponentials:
      - $e^{1.41} \approx 4.096$
      - $e^{0.71} \approx 2.034$ (for the 2nd and 3rd elements)
    - Sum of exponentials: $4.096 + 2.034 + 2.034 \approx 8.164$
    - Softmax values:
      - $\frac{4.096}{8.164} \approx 0.501$
      - $\frac{2.034}{8.164} \approx 0.249$
      - $\frac{2.034}{8.164} \approx 0.249$
    - Final result: $[0.501, 0.249, 0.249]$ ≈ $[0.50, 0.25, 0.25]$

  The final softmax matrix $\alpha$ (with slight rounding applied) is:

  \[\alpha = \begin{bmatrix} 0.40 & 0.20 & 0.40 \\ 0.40 & 0.40 & 0.20 \\ 0.50 & 0.25 & 0.25 \end{bmatrix}\]

  Key observations about the softmax results:

  - All output values are between 0 and 1
  - Each row sums to 1
  - Equal input values result in equal output probabilities (e.g., the 1st and 3rd elements of Row 1)
  - Larger input values receive larger output probabilities (e.g., the 1st element of Row 3)

- Multiply by $V$

  \[\text{Attention}(Q, K, V) = \alpha V.\]

  - Row 1 weights $[0.40, 0.20, 0.40]$ on $V$:

    \[0.40 \times [0,2] + 0.20 \times [1,1] + 0.40 \times [2,0] = [0 + 0.20 + 0.80,\; 0.80 + 0.20 + 0] = [1.00,\; 1.00].\]

  - Row 2 weights $[0.40, 0.40, 0.20]$:

    \[0.40 \times [0,2] + 0.40 \times [1,1] + 0.20 \times [2,0] = [0,\;0.80] + [0.40,\;0.40] + [0.40,\;0] = [0.80,\;1.20].\]

  - Row 3 weights $[0.50, 0.25, 0.25]$:

    \[0.50 \times [0,2] + 0.25 \times [1,1] + 0.25 \times [2,0] = [0,\;1.0] + [0.25,\;0.25] + [0.50,\;0] = [0.75,\;1.25].\]

Final Output (rounded values):

\[\begin{bmatrix} 1.00 & 1.00 \\ 0.80 & 1.20 \\ 0.75 & 1.25 \end{bmatrix}\]
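The hand computation in Example 1 can be checked with a short script. This is a minimal sketch in plain Python, with no external libraries. The helper names `transpose`, `matmul`, `softmax`, and `scaled_dot_product_attention` are chosen here for illustration.

```python
import math


def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    # Row-by-column products; zip(*b) iterates over the columns of b.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q, k, v):
    """Return (attention weights, output) for softmax(QK^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return weights, matmul(weights, v)


# Matrices from Example 1.
Q = [[1, 0], [0, 1], [1, 1]]
K = [[1, 1], [0, 1], [1, 0]]
V = [[0, 2], [1, 1], [2, 0]]

weights, output = scaled_dot_product_attention(Q, K, V)
for row in output:
    print([round(x, 2) for x in row])
```

Because the script does not round intermediate values, the last row comes out close to $[0.74, 1.26]$ rather than the hand-rounded $[0.75, 1.25]$. The first two rows agree with the worked example to two decimals.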

Example 2: Another Small-Dimension Example

Let us consider an even smaller example:

\[Q = \begin{bmatrix} 1 & 1 \end{bmatrix}, \quad K = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}, \quad V = \begin{bmatrix} 2 & 3 \\ 4 & 1 \end{bmatrix}.\]

Here, $Q$ is $1 \times 2$, $K$ is $2 \times 2$, and $V$ is $2 \times 2$.

- Compute $QK^T$

  Since $K$ is the identity matrix, which is symmetric, $K^T = K$:

  \[QK^T = QK = \begin{bmatrix} 1 & 1 \end{bmatrix} \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} 1 & 1 \end{bmatrix}.\]

- Scale by $\sqrt{d_k}$

  $d_k = 2$. Thus, $\frac{1}{\sqrt{2}} \approx \frac{1}{1.41} \approx 0.71$. So

  \[\frac{[1,\;1]}{1.41} \approx [0.71,\;0.71].\]

- Softmax

  $[0.71, 0.71]$ has equal values, so the softmax is $[0.5, 0.5]$.

- Multiply by $V$

  \[[0.5,\;0.5] \begin{bmatrix} 2 & 3 \\ 4 & 1 \end{bmatrix} = 0.5 \times [2,3] + 0.5 \times [4,1] = [1,1.5] + [2,0.5] = [3,2].\]

Final Output: $[3,\;2]$.

Example 3: Larger Q and K with V as a Column Vector

Let us consider an example where $Q$ and $K$ have a larger dimension, but $V$ has only one column:

\[Q = \begin{bmatrix} 1 & 1 & 1 & 1 \end{bmatrix}, \quad K = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}, \quad V = \begin{bmatrix} 2 \\ 4 \\ 6 \\ 8 \end{bmatrix}.\]

In-Course Question: What is the attention output for the above $Q$, $K$, and $V$?

Multi-Head Attention

Multi-head attention projects $Q, K, V$ into multiple subspaces and performs several parallel scaled dot-product attentions (referred to as “heads”). These are concatenated, then transformed via a final linear projection:

\[\text{MultiHead}(Q, K, V) = \text{Concat}(\text{head}_1, \ldots, \text{head}_h) W^O,\]

where each head is computed as:

\[\text{head}_i = \text{Attention}(Q W_i^Q, K W_i^K, V W_i^V).\]

Below are multiple examples illustrating how multi-head attention calculations are performed, with increasingly detailed numeric demonstrations.

Example 1: Two-Head Attention Computation (Conceptual Illustration)

Let us assume we have a 2-head setup ($h = 2$), each head operating on half the dimension of $Q, K, V$. For instance, if the original dimension is 4, each head dimension could be 2.

- Step 1: Linear transformations and splitting

  \[Q W^Q \rightarrow [Q_1,\ Q_2], \quad K W^K \rightarrow [K_1,\ K_2], \quad V W^V \rightarrow [V_1,\ V_2].\]

  Here, $[Q_1,\ Q_2]$ means we split the transformed $Q$ along its last dimension into two sub-matrices (head 1 and head 2).

- Step 2: Compute scaled dot-product attention for each head

  \[\text{head}_1 = \text{Attention}(Q_1, K_1, V_1), \quad \text{head}_2 = \text{Attention}(Q_2, K_2, V_2).\]

  Suppose after computation:

  \[\text{head}_1 = \begin{bmatrix} h_{11} & h_{12} \\ h_{21} & h_{22} \\ h_{31} & h_{32} \end{bmatrix}, \quad \text{head}_2 = \begin{bmatrix} g_{11} & g_{12} \\ g_{21} & g_{22} \\ g_{31} & g_{32} \end{bmatrix}.\]

- Step 3: Concatenate and apply final linear transform

  Concatenating the heads yields a $3 \times 4$ matrix (if each head is $3 \times 2$):

  \[\text{Concat}(\text{head}_1, \text{head}_2) = \begin{bmatrix} h_{11} & h_{12} & g_{11} & g_{12} \\ h_{21} & h_{22} & g_{21} & g_{22} \\ h_{31} & h_{32} & g_{31} & g_{32} \end{bmatrix}.\]

  We then multiply by $W^O$ (e.g., a $4 \times 4$ matrix) to get the final multi-head attention output.

Note: Actual numeric computation requires specifying all projection matrices $W_i^Q, W_i^K, W_i^V, W^O$ and the input $Q, K, V$. Below, we provide more concrete numeric examples.
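The splitting in Step 1 and the concatenation in Step 3 are pure reshaping operations, which the following sketch makes concrete. The helper names `split_heads` and `concat_heads` and the toy matrix `x` are assumptions for illustration. The attention computation of Step 2 is deliberately left out.

```python
def split_heads(x, num_heads):
    """Split each row of x (n x d_model) into num_heads chunks along the last dimension."""
    d_model = len(x[0])
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads
    return [[row[h * d_head:(h + 1) * d_head] for row in x] for h in range(num_heads)]


def concat_heads(heads):
    """Concatenate per-head matrices (each n x d_head) along the last dimension."""
    n = len(heads[0])
    return [sum((head[i] for head in heads), []) for i in range(n)]


# A 3 x 4 matrix standing in for an already projected Q (3 tokens, dimension 4).
x = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]]
q1, q2 = split_heads(x, num_heads=2)
print(q1)  # [[1, 2], [5, 6], [9, 10]]
print(q2)  # [[3, 4], [7, 8], [11, 12]]
print(concat_heads([q1, q2]) == x)  # True
```

Splitting followed by concatenation returns the original matrix, which is why the concatenated heads again have shape $3 \times 4$ before $W^O$ is applied.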

Example 2: Two-Head Attention with Full Numerical Details

In this example, we will provide explicit numbers for a 2-head setup. We will assume each of $Q, K, V$ has shape $(3,4)$: there are 3 “tokens” (or time steps), each with a hidden size of 4. We split that hidden size into 2 heads, each with size 2.

Step 0: Define inputs and parameters

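Let

\[Q = \begin{bmatrix} 1 & 2 & 1 & 0\\ 0 & 1 & 1 & 1\\ 1 & 0 & 2 & 1 \end{bmatrix}, \quad K = \begin{bmatrix} 1 & 1 & 0 & 2\\ 2 & 1 & 1 & 0\\ 0 & 1 & 1 & 1 \end{bmatrix}, \quad V = \begin{bmatrix} 1 & 1 & 0 & 0\\ 0 & 2 & 1 & 1\\ 1 & 1 & 2 & 2 \end{bmatrix}.\]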

We also define the projection matrices for the two heads. For simplicity, we assume each projection matrix has shape $(4,2)$ (since we project dimension 4 down to dimension 2), and $W^O$ will have shape $(4,4)$ to map the concatenated result $(3,4)$ back to $(3,4)$.

Let’s define:

\[W^Q_1 = \begin{bmatrix} 1 & 0\\ 0 & 1\\ 1 & 0\\ 0 & 1 \end{bmatrix}, \quad W^K_1 = \begin{bmatrix} 1 & 0\\ 0 & 1\\ 0 & 1\\ 1 & 0 \end{bmatrix}, \quad W^V_1 = \begin{bmatrix} 1 & 0\\ 0 & 1\\ 1 & 0\\ 0 & 1 \end{bmatrix},\]

\[W^Q_2 = \begin{bmatrix} 0 & 1\\ 1 & 0\\ 1 & 1\\ 0 & 0 \end{bmatrix}, \quad W^K_2 = \begin{bmatrix} 0 & 1\\ 1 & 0\\ 1 & 0\\ 1 & 1 \end{bmatrix}, \quad W^V_2 = \begin{bmatrix} 0 & 1\\ 1 & 1\\ 0 & 1\\ 1 & 0 \end{bmatrix}.\]

And let:

\[W^O = \begin{bmatrix} 1 & 0 & 0 & 1\\ 0 & 1 & 1 & 0\\ 1 & 0 & 1 & 0\\ 0 & 1 & 0 & 1 \end{bmatrix}.\]

We will go step by step.

Step 1: Compute $Q_1, K_1, V_1$ for Head 1

\[Q_1 = Q \times W^Q_1,\quad K_1 = K \times W^K_1,\quad V_1 = V \times W^V_1.\]

- $Q_1 = Q W^Q_1$.

  \[Q = \begin{bmatrix} 1 & 2 & 1 & 0\\ 0 & 1 & 1 & 1\\ 1 & 0 & 2 & 1 \end{bmatrix}, \quad W^Q_1 = \begin{bmatrix} 1 & 0\\ 0 & 1\\ 1 & 0\\ 0 & 1 \end{bmatrix}.\]

  Each row of $Q$ is multiplied by $W^Q_1$:

  - Row 1 of $Q$: $[1,2,1,0]$

    \[[1,2,1,0] \begin{bmatrix} 1 & 0\\ 0 & 1\\ 1 & 0\\ 0 & 1 \end{bmatrix} = [1 \cdot 1 + 2 \cdot 0 + 1 \cdot 1 + 0 \cdot 0,\; 1 \cdot 0 + 2 \cdot 1 + 1 \cdot 0 + 0 \cdot 1] = [2,\;2].\]

  - Row 2: $[0,1,1,1]$

    \[[0,1,1,1] \begin{bmatrix} 1 & 0\\ 0 & 1\\ 1 & 0\\ 0 & 1 \end{bmatrix} = [1,\;2].\]

  - Row 3: $[1,0,2,1]$

    \[[1,0,2,1] \begin{bmatrix} 1 & 0\\ 0 & 1\\ 1 & 0\\ 0 & 1 \end{bmatrix} = [3,\;1].\]

  Thus,

  \[Q_1 = \begin{bmatrix} 2 & 2\\ 1 & 2\\ 3 & 1 \end{bmatrix}.\]

- $K_1 = K W^K_1$.

  \[K = \begin{bmatrix} 1 & 1 & 0 & 2\\ 2 & 1 & 1 & 0\\ 0 & 1 & 1 & 1 \end{bmatrix},\quad W^K_1 = \begin{bmatrix} 1 & 0\\ 0 & 1\\ 0 & 1\\ 1 & 0 \end{bmatrix}.\]

  - Row 1: $[1,1,0,2]$

    \[[1,1,0,2] \times \begin{bmatrix} 1 & 0\\ 0 & 1\\ 0 & 1\\ 1 & 0 \end{bmatrix} = [3,\;1].\]

  - Row 2: $[2,1,1,0]$

    \[[2,1,1,0] \times \begin{bmatrix} 1 & 0\\ 0 & 1\\ 0 & 1\\ 1 & 0 \end{bmatrix} = [2,\;2].\]

  - Row 3: $[0,1,1,1]$

    \[[0,1,1,1] \times \begin{bmatrix} 1 & 0\\ 0 & 1\\ 0 & 1\\ 1 & 0 \end{bmatrix} = [1,\;2].\]

  So,

  \[K_1 = \begin{bmatrix} 3 & 1\\ 2 & 2\\ 1 & 2 \end{bmatrix}.\]

- $V_1 = V W^V_1$.

  \[V = \begin{bmatrix} 1 & 1 & 0 & 0\\ 0 & 2 & 1 & 1\\ 1 & 1 & 2 & 2 \end{bmatrix},\quad W^V_1 = \begin{bmatrix} 1 & 0\\ 0 & 1\\ 1 & 0\\ 0 & 1 \end{bmatrix}.\]

  - Row 1: $[1,1,0,0]$

    \[[1,1,0,0] \times \begin{bmatrix} 1 & 0\\ 0 & 1\\ 1 & 0\\ 0 & 1 \end{bmatrix} = [1,\;1].\]

  - Row 2: $[0,2,1,1]$

    \[[0,2,1,1] \times \begin{bmatrix} 1 & 0\\ 0 & 1\\ 1 & 0\\ 0 & 1 \end{bmatrix} = [1,\;3].\]

  - Row 3: $[1,1,2,2]$

    \[[1,1,2,2] \times \begin{bmatrix} 1 & 0\\ 0 & 1\\ 1 & 0\\ 0 & 1 \end{bmatrix} = [3,\;3].\]

  Therefore,

  \[V_1 = \begin{bmatrix} 1 & 1\\ 1 & 3\\ 3 & 3 \end{bmatrix}.\]

Step 2: Compute $Q_2, K_2, V_2$ for Head 2

\[Q_2 = Q \times W^Q_2,\quad K_2 = K \times W^K_2,\quad V_2 = V \times W^V_2.\]

- $Q_2 = Q W^Q_2$:

  \[W^Q_2 = \begin{bmatrix} 0 & 1\\ 1 & 0\\ 1 & 1\\ 0 & 0 \end{bmatrix}.\]

  - Row 1 $[1,2,1,0]$:

    \[[1,2,1,0] \times \begin{bmatrix} 0 & 1\\ 1 & 0\\ 1 & 1\\ 0 & 0 \end{bmatrix} = [3,\;2].\]

  - Row 2 $[0,1,1,1]$:

    \[[0,1,1,1] \times \begin{bmatrix} 0 & 1\\ 1 & 0\\ 1 & 1\\ 0 & 0 \end{bmatrix} = [2,\;1].\]

  - Row 3 $[1,0,2,1]$:

    \[[1,0,2,1] \times \begin{bmatrix} 0 & 1\\ 1 & 0\\ 1 & 1\\ 0 & 0 \end{bmatrix} = [2,\;3].\]

  Hence,

  \[Q_2 = \begin{bmatrix} 3 & 2\\ 2 & 1\\ 2 & 3 \end{bmatrix}.\]

- $K_2 = K W^K_2$:

  \[W^K_2 = \begin{bmatrix} 0 & 1\\ 1 & 0\\ 1 & 0\\ 1 & 1 \end{bmatrix}.\]

  - Row 1 $[1,1,0,2]$:

    \[[1,1,0,2] \times \begin{bmatrix} 0 & 1\\ 1 & 0\\ 1 & 0\\ 1 & 1 \end{bmatrix} = [3,\;3].\]

  - Row 2 $[2,1,1,0]$:

    \[[2,1,1,0] \times \begin{bmatrix} 0 & 1\\ 1 & 0\\ 1 & 0\\ 1 & 1 \end{bmatrix} = [2,\;2].\]

  - Row 3 $[0,1,1,1]$:

    \[[0,1,1,1] \times \begin{bmatrix} 0 & 1\\ 1 & 0\\ 1 & 0\\ 1 & 1 \end{bmatrix} = [3,\;1].\]

  So,

  \[K_2 = \begin{bmatrix} 3 & 3\\ 2 & 2\\ 3 & 1 \end{bmatrix}.\]

- $V_2 = V W^V_2$:

  \[W^V_2 = \begin{bmatrix} 0 & 1\\ 1 & 1\\ 0 & 1\\ 1 & 0 \end{bmatrix}.\]

  - Row 1 $[1,1,0,0]$:

    \[[1,1,0,0] \times \begin{bmatrix} 0 & 1\\ 1 & 1\\ 0 & 1\\ 1 & 0 \end{bmatrix} = [1,\;2].\]

  - Row 2 $[0,2,1,1]$:

    \[[0,2,1,1] \times \begin{bmatrix} 0 & 1\\ 1 & 1\\ 0 & 1\\ 1 & 0 \end{bmatrix} = [3,\;3].\]

  - Row 3 $[1,1,2,2]$:

    \[[1,1,2,2] \times \begin{bmatrix} 0 & 1\\ 1 & 1\\ 0 & 1\\ 1 & 0 \end{bmatrix} = [3,\;4].\]

  Thus,

  \[V_2 = \begin{bmatrix} 1 & 2\\ 3 & 3\\ 3 & 4 \end{bmatrix}.\]

Step 3: Compute each head’s Scaled Dot-Product Attention

We now have for head 1:

\[Q_1 = \begin{bmatrix}2 & 2\\1 & 2\\3 & 1\end{bmatrix},\; K_1 = \begin{bmatrix}3 & 1\\2 & 2\\1 & 2\end{bmatrix},\; V_1 = \begin{bmatrix}1 & 1\\1 & 3\\3 & 3\end{bmatrix}.\]

Similarly for head 2:

\[Q_2 = \begin{bmatrix}3 & 2\\2 & 1\\2 & 3\end{bmatrix},\; K_2 = \begin{bmatrix}3 & 3\\2 & 2\\3 & 1\end{bmatrix},\; V_2 = \begin{bmatrix}1 & 2\\3 & 3\\3 & 4\end{bmatrix}.\]

Each key vector has dimension $d_k = 2$. Hence the scale is $\frac{1}{\sqrt{2}} \approx 0.707$.

- Head 1:

  - $Q_1 K_1^T$.

    $K_1^T$ is

    \[\begin{bmatrix} 3 & 2 & 1\\ 1 & 2 & 2 \end{bmatrix},\]

    so

    \[Q_1 K_1^T = \begin{bmatrix} 2 & 2\\ 1 & 2\\ 3 & 1 \end{bmatrix} \times \begin{bmatrix} 3 & 2 & 1\\ 1 & 2 & 2 \end{bmatrix} = \begin{bmatrix} 8 & 8 & 6\\ 5 & 6 & 5\\ 10 & 8 & 5 \end{bmatrix}.\]

  - Scale: $\frac{Q_1 K_1^T}{\sqrt{2}}$:

    \[\approx \begin{bmatrix} 5.66 & 5.66 & 4.24\\ 3.54 & 4.24 & 3.54\\ 7.07 & 5.66 & 3.54 \end{bmatrix}.\]

  - Apply softmax row-wise (approx results after exponentiation and normalization):

    \[\alpha_1 \approx \begin{bmatrix} 0.45 & 0.45 & 0.11\\ 0.25 & 0.50 & 0.25\\ 0.79 & 0.19 & 0.02 \end{bmatrix}.\]

  - Multiply by $V_1$:

    \[\text{head}_1 = \alpha_1 \times V_1.\]

    Approximating:

    \[\text{head}_1 \approx \begin{bmatrix} 1.23 & 2.13\\ 1.50 & 2.50\\ 1.04 & 1.42 \end{bmatrix}.\]

- Head 2:

  - $Q_2 K_2^T$.

    \[Q_2 = \begin{bmatrix} 3 & 2\\ 2 & 1\\ 2 & 3 \end{bmatrix},\quad K_2 = \begin{bmatrix} 3 & 3\\ 2 & 2\\ 3 & 1 \end{bmatrix}.\]

    Then

    \[K_2^T = \begin{bmatrix} 3 & 2 & 3\\ 3 & 2 & 1 \end{bmatrix}, \quad Q_2 K_2^T = \begin{bmatrix} 15 & 10 & 11\\ 9 & 6 & 7\\ 15 & 10 & 9 \end{bmatrix}.\]

  - Scale: multiply by $1/\sqrt{2} \approx 0.707$:

    \[\approx \begin{bmatrix} 10.61 & 7.07 & 7.78\\ 6.36 & 4.24 & 4.95\\ 10.61 & 7.07 & 6.36 \end{bmatrix}.\]

  - Softmax row-wise (approx):

    \[\alpha_2 \approx \begin{bmatrix} 0.92 & 0.03 & 0.05\\ 0.73 & 0.09 & 0.18\\ 0.96 & 0.03 & 0.01 \end{bmatrix}.\]

  - Multiply by $V_2$:

    \[V_2 = \begin{bmatrix} 1 & 2\\ 3 & 3\\ 3 & 4 \end{bmatrix}.\]

    Approximating:

    \[\text{head}_2 \approx \begin{bmatrix} 1.16 & 2.13\\ 1.53 & 2.45\\ 1.08 & 2.06 \end{bmatrix}.\]

Step 4: Concatenate and apply $W^O$

We now concatenate $\text{head}_1$ and $\text{head}_2$ horizontally to form a $(3 \times 4)$ matrix:

\[\text{Concat}(\text{head}_1, \text{head}_2) \approx \begin{bmatrix} 1.23 & 2.13 & 1.16 & 2.13\\ 1.50 & 2.50 & 1.53 & 2.45\\ 1.04 & 1.42 & 1.08 & 2.06 \end{bmatrix}.\]

Finally, multiply by $W^O$ $(4 \times 4)$:

\[\text{Output} = (\text{Concat}(\text{head}_1, \text{head}_2)) \times W^O,\]

where

\[W^O = \begin{bmatrix} 1 & 0 & 0 & 1\\ 0 & 1 & 1 & 0\\ 1 & 0 & 1 & 0\\ 0 & 1 & 0 & 1 \end{bmatrix}.\]

We can do a row-by-row multiplication to get the final multi-head attention output (details omitted for brevity).
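For readers who want to check the two-head example, including the final multiplication by $W^O$, the following plain-Python sketch reruns all four steps with the matrices defined in this example. The helper names are chosen here for illustration. Since the script keeps full precision, its results can differ from the hand-rounded values in the second decimal place.

```python
import math


def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


# Inputs and projection matrices from Example 2.
Q = [[1, 2, 1, 0], [0, 1, 1, 1], [1, 0, 2, 1]]
K = [[1, 1, 0, 2], [2, 1, 1, 0], [0, 1, 1, 1]]
V = [[1, 1, 0, 0], [0, 2, 1, 1], [1, 1, 2, 2]]
WQ = [[[1, 0], [0, 1], [1, 0], [0, 1]], [[0, 1], [1, 0], [1, 1], [0, 0]]]
WK = [[[1, 0], [0, 1], [0, 1], [1, 0]], [[0, 1], [1, 0], [1, 0], [1, 1]]]
WV = [[[1, 0], [0, 1], [1, 0], [0, 1]], [[0, 1], [1, 1], [0, 1], [1, 0]]]
WO = [[1, 0, 0, 1], [0, 1, 1, 0], [1, 0, 1, 0], [0, 1, 0, 1]]

# Steps 1-3: project Q, K, V per head and run scaled dot-product attention.
heads = [
    attention(matmul(Q, wq), matmul(K, wk), matmul(V, wv))
    for wq, wk, wv in zip(WQ, WK, WV)
]
# Step 4: concatenate the two heads row by row, then apply W^O.
concat = [h1 + h2 for h1, h2 in zip(*heads)]
output = matmul(concat, WO)

for row in output:
    print([round(x, 2) for x in row])
```

With this particular $W^O$, each output row $[a, b, c, d] W^O$ is simply $[a + c,\; b + d,\; b + c,\; a + d]$, which makes the printed result easy to cross-check against the concatenated matrix.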

Example 3: Three-Head Attention with Another Set of Numbers (Short Demonstration)

For completeness, suppose we wanted $h=3$ heads, each of dimension $\frac{d_{\text{model}}}{3}$. The steps are exactly the same:

- Project $Q, K, V$ into three subspaces via $W^Q_i, W^K_i, W^V_i$.

- Perform scaled dot-product attention for each head: $\text{head}_i = \text{Attention}(Q_i, K_i, V_i)$.

- Concatenate all heads: $\text{Concat}(\text{head}_1, \text{head}_2, \text{head}_3)$.

- Multiply by $W^O$.

Each numeric calculation is analogous to the 2-head case, just with different shapes. Note that the per-head dimension $d_{\text{model}}/h$ must be an integer: with $d_{\text{model}} = 4$ and $h = 3$ it would be $4/3$, so in practice $d_{\text{model}}$ is chosen to be divisible by $h$ (for example, $d_{\text{model}} = 6$ with three heads of dimension 2). The procedure remains identical in principle.

Position-Wise Feed-Forward Networks

Each layer in a Transformer includes a position-wise feed-forward network (FFN) that applies a linear transformation and activation to each position independently:

\[\text{FFN}(x) = \max(0,\; xW_1 + b_1)\, W_2 + b_2,\]

where $\max(0, \cdot)$ is the ReLU activation function.
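As a minimal sketch, the formula can be written in plain Python as follows. The helper names `matmul` and `ffn` are chosen for illustration, and the small matrices are the same ones used in the numerical example in this section, so the hand computation can be compared against the printed result.

```python
def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def ffn(x, w1, b1, w2, b2):
    """Position-wise feed-forward network: max(0, x W1 + b1) W2 + b2."""
    # First linear layer followed by ReLU, applied to every row (position).
    hidden = [[max(0, h + b) for h, b in zip(row, b1)] for row in matmul(x, w1)]
    # Second linear layer.
    return [[o + b for o, b in zip(row, b2)] for row in matmul(hidden, w2)]


x = [[1, 0], [0, 1], [1, 1]]
w1, b1 = [[1, 1], [0, 1]], [0, 1]
w2, b2 = [[1, 0], [2, 1]], [1, -1]

print(ffn(x, w1, b1, w2, b2))  # [[6, 1], [5, 1], [8, 2]]
```

Each row of `x` is processed independently of the others, which is what "position-wise" refers to.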

Example: Numerical Computation of the Feed-Forward Network

Let

\[x = \begin{bmatrix} 1 & 0 \\ 0 & 1 \\ 1 & 1 \end{bmatrix},\quad W_1 = \begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix},\quad b_1 = \begin{bmatrix} 0 & 1 \end{bmatrix},\quad W_2 = \begin{bmatrix} 1 & 0 \\ 2 & 1 \end{bmatrix},\quad b_2 = \begin{bmatrix} 1 & -1 \end{bmatrix}.\]

- Compute $xW_1 + b_1$

  - Row 1: $[1, 0]$

    \[[1, 0] \begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix} = [1, 1],\]

    then add $[0, 1]$ to get $[1, 2]$.

  - Row 2: $[0, 1]$

    \[[0,1]\times \begin{bmatrix}1 & 1\\0 & 1\end{bmatrix} = [0, 1],\]

    plus $[0, 1]$ = $[0, 2]$.

  - Row 3: $[1,1]$

    \[[1,1]\times \begin{bmatrix}1 & 1\\0 & 1\end{bmatrix} = [1, 2],\]

    plus $[0, 1]$ = $[1, 3]$.

  So

  \[X_1 = \begin{bmatrix} 1 & 2\\ 0 & 2\\ 1 & 3 \end{bmatrix}.\]

- ReLU activation

  $\max(0, X_1)$ leaves nonnegative elements unchanged. All entries are already $\ge 0$, so

  \[\text{ReLU}(X_1) = X_1.\]

- Multiply by $W_2$ and add $b_2$

  \[W_2 = \begin{bmatrix} 1 & 0\\ 2 & 1 \end{bmatrix},\quad b_2 = [1, -1], \quad X_2 = X_1 W_2.\]

  - Row 1 of $X_1$: $[1,2]$

    \[[1,2] \begin{bmatrix} 1\\2 \end{bmatrix} = 1 \cdot 1 + 2 \cdot 2 = 5, \quad [1,2] \begin{bmatrix} 0\\1 \end{bmatrix} = 0 + 2 = 2.\]

    So $[5,2]$.

  - Row 2: $[0,2]$

    \[[0,2] \begin{bmatrix}1\\2\end{bmatrix}=4,\quad [0,2] \begin{bmatrix}0\\1\end{bmatrix}=2.\]

    So $[4,2]$.

  - Row 3: $[1,3]$

    \[[1,3]\begin{bmatrix}1\\2\end{bmatrix}=1+6=7,\quad [1,3]\begin{bmatrix}0\\1\end{bmatrix}=0+3=3.\]

    So $[7,3]$.

  Thus

  \[X_2 = \begin{bmatrix} 5 & 2\\ 4 & 2\\ 7 & 3 \end{bmatrix}.\]

  Add $b_2=[1,-1]$:

  \[X_2 + b_2 = \begin{bmatrix} 6 & 1\\ 5 & 1\\ 8 & 2 \end{bmatrix}.\]

Final Output:

\[\begin{bmatrix} 6 & 1\\ 5 & 1\\ 8 & 2 \end{bmatrix}.\]

Training and Optimization

Optimizer and Learning Rate Scheduling

Transformers are commonly trained with Adam or AdamW, combined with a learning rate schedule that increases linearly during a warmup phase and then decays proportionally to the inverse square root of the step number:

\[l_{\text{rate}} = d_{\text{model}}^{-0.5} \cdot \min\bigl(\text{step}_\text{num}^{-0.5},\; \text{step}_\text{num}\times \text{warmup}_\text{steps}^{-1.5}\bigr),\]

where:

- $d_{\text{model}}$ is the hidden dimension.

- $\text{step}_\text{num}$ is the current training step.

- $\text{warmup}_\text{steps}$ is the number of warmup steps.
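The schedule is easy to evaluate directly. The sketch below is a plain-Python version of the formula. The function name `transformer_lr` is chosen here for illustration, and the defaults $d_{\text{model}} = 512$ and 4000 warmup steps are the base-model settings reported in Attention Is All You Need.

```python
def transformer_lr(step_num, d_model=512, warmup_steps=4000):
    """Warmup / inverse-square-root schedule; step_num must be >= 1."""
    return d_model ** -0.5 * min(step_num ** -0.5, step_num * warmup_steps ** -1.5)


# The rate grows linearly until warmup_steps, then decays as 1 / sqrt(step_num).
for step in (1, 1000, 4000, 16000, 64000):
    print(step, transformer_lr(step))
```

The two arguments of `min` are equal at `step_num == warmup_steps`, so the learning rate peaks exactly at the end of warmup.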

Conclusion

The Transformer architecture has become a foundational model in modern deep learning, showing remarkable performance in NLP, computer vision, and multimodal applications. Its ability to capture long-range dependencies, combined with high parallelizability and scalability, has inspired a diverse range of research directions and practical systems. Ongoing work continues to explore ways to improve Transformer efficiency, adapt it to new scenarios, and enhance model interpretability.

Paper Reading: Attention Is All You Need

The following notes walk through the paper Attention Is All You Need section by section. For each section or subsection, they first restate (“recap”) the content and then comment on or explain it in more detail. Each header corresponds to a main section or subsection of the original text. The original content has been paraphrased and condensed, but the overall structure and meaning are preserved.

Note: The original paper, “Attention Is All You Need,” was published by Ashish Vaswani et al. This markdown document is for educational purposes, offering an English re-statement of each section followed by commentary.

Authors and Affiliations

Original (Condensed)

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin.

Affiliations: Google Brain, Google Research, University of Toronto.

Recap

A group of researchers from Google Brain, Google Research, and the University of Toronto propose a new network architecture that relies solely on attention mechanisms for sequence transduction tasks such as machine translation.

Commentary

This highlights that multiple authors, each potentially focusing on different aspects—model design, optimization, and experiments—came together to create what is now often referred to as the “Transformer” architecture.

Abstract

Original (Condensed)

The dominant sequence transduction models use recurrent or convolutional neural networks (often with attention). This paper proposes the Transformer, which is based entirely on attention mechanisms. It does away with recurrence and convolutions entirely. Experiments on two machine translation tasks show the model is both high-performing in terms of BLEU score and more parallelizable. The paper reports a new state-of-the-art BLEU on WMT 2014 English-German (28.4) and a strong single-model result on English-French (41.8), trained much faster than previous approaches. The Transformer also generalizes well to other tasks, e.g., English constituency parsing.

Recap

The paper’s abstract introduces a novel approach called the Transformer. It uses only attention (no RNNs or CNNs) for tasks like machine translation and shows exceptional speed and accuracy results.

Commentary

This is a seminal innovation in deep learning for language processing. Removing recurrence (like LSTM layers) and convolutions makes training highly parallelizable, dramatically reducing training time. At the same time, it achieves superior or comparable performance on well-known benchmarks. The abstract also hints that the Transformer concept could generalize to other sequential or structured tasks.

1 Introduction

Original (Condensed)

Recurrent neural networks (RNNs), particularly LSTM or GRU models, have set the standard in sequence modeling and transduction tasks. However, they process input sequentially, limiting parallelization. Attention mechanisms have improved performance in tasks like translation, but they have traditionally been used on top of recurrent networks. This paper proposes a model that relies entirely on attention, called the Transformer, removing the need for recurrence or convolutional architectures. The result is a model that learns global dependencies and can be trained more efficiently.

Recap

The introduction situates the proposed Transformer within the history of neural sequence modeling: first purely recurrent approaches, then RNN+attention, and finally a pure-attention approach. The authors observe that while recurrent models handle sequences effectively, they rely on step-by-step processing. This strongly limits parallel computation. The Transformer’s innovation is to dispense with recurrences altogether.

Commentary

The introduction highlights a major bottleneck in typical RNN-based models: the inability to parallelize across time steps in a straightforward way. Traditional attention over RNN outputs is still useful, but the authors propose a more radical approach, removing recurrences and using attention everywhere. This sets the stage for a highly parallelizable model that can scale better to longer sequences, given sufficient memory and computational resources.

In-Course Question 1: What is the dimensionality of the Transformer’s query vectors in the model designed in this paper?

2 Background

Original (Condensed)

Efforts to reduce the sequential computation have led to alternatives like the Extended Neural GPU, ByteNet, and ConvS2S, which use convolutional networks for sequence transduction. However, even with convolution, the distance between two positions can be large in deep stacks, potentially making it harder to learn long-range dependencies. Attention mechanisms have been used for focusing on specific positions in a sequence, but typically in conjunction with RNNs. The Transformer is the first purely attention-based model for transduction.

Recap

The background section covers attempts to speed up sequence modeling, including convolution-based architectures. While they improve speed and are more parallelizable than RNNs, they still can have challenges with long-range dependencies. Attention can address such dependencies, but before this paper, it was usually combined with recurrent models.

Commentary

This background motivates why researchers might try to eliminate recurrence and convolution entirely. If attention alone can handle dependency modeling, then the path length between any two positions in a sequence is effectively shorter. This suggests simpler, faster training and potentially better performance.

3 Model Architecture

The Transformer follows an encoder-decoder structure, but with self-attention replacing recurrences or convolutions.

3.1 Encoder and Decoder Stacks

Original (Condensed)

The encoder is composed of N identical layers; each layer has (1) a multi-head self-attention sub-layer, and (2) a position-wise feed-forward network. A residual connection is employed around each of these, followed by layer normalization. The decoder also has N identical layers with an additional sub-layer for attention over the encoder output. A masking scheme ensures each position in the decoder cannot attend to subsequent positions (causal masking).

Recap

- Encoder: Stack of N layers. Each layer has a self-attention sub-layer and a feed-forward sub-layer, each wrapped in a skip (residual) connection followed by layer normalization.

- Decoder: Similar stack but also attends to the encoder output. Additionally, the decoder masks future positions to preserve the autoregressive property.

Commentary

This design is highly modular: each layer is built around multi-head attention and a feed-forward block. The skip connections help with training stability, and layer normalization is known to speed up convergence. The causal masking in the decoder is crucial for generation tasks such as translation, ensuring that the model cannot “peek” at future tokens.
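The causal masking described above can be illustrated with a small sketch. This is a minimal pure-Python illustration, not the paper's implementation; the helper names `causal_mask` and `apply_mask` are hypothetical. It assumes each position may attend to itself and to earlier positions, and that disallowed scores are set to negative infinity before the softmax.

```python
def causal_mask(n):
    """Return an n x n boolean matrix; mask[i][j] is True when position i may attend to j."""
    return [[j <= i for j in range(n)] for i in range(n)]


def apply_mask(scores, mask, neg=float("-inf")):
    """Replace disallowed scores with -inf so a later softmax gives them zero weight."""
    return [[s if ok else neg for s, ok in zip(row, mask_row)]
            for row, mask_row in zip(scores, mask)]


# Visualize the mask for a sequence of 4 tokens ("x" = allowed, "." = blocked).
for row in causal_mask(4):
    print("".join("x" if ok else "." for ok in row))
# x...
# xx..
# xxx.
# xxxx
```

Each row is a query position and each column a key position, so the lower-triangular pattern shows that no position can see tokens to its right.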

3.2 Attention

Original (Condensed)

An attention function maps a query and a set of key-value pairs to an output. We use a “Scaled Dot-Product Attention,” where the dot products between query and key vectors are divided by the square root of the key dimension d_k. A softmax yields weights for each value. We also introduce multi-head attention: queries, keys, and values are linearly projected h times, each head performing attention in parallel, then combined.

Recap

- Scaled Dot-Product Attention: Computes the attention output as softmax(QK^T / sqrt(d_k)) V, where the softmax produces the attention weights.

- Multi-Head Attention: Instead of a single attention, we project Q, K, V into multiple sub-spaces (heads), do attention in parallel, then concatenate.
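The scaled dot-product formula can be written out directly. The following is a minimal sketch in pure Python (lists of lists instead of tensors), intended only to make the computation concrete; the function names and the toy inputs are assumptions made for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    outputs = []
    for q in Q:
        # One score per key: dot(q, k) / sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        outputs.append([sum(w * v[c] for w, v in zip(weights, V))
                        for c in range(len(V[0]))])
    return outputs


# Toy example: one query, two key-value pairs.
Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
out = attention(Q, K, V)
print(out)  # the first value gets more weight because the query matches the first key
```

Because the attention weights sum to 1, each output row is a convex combination of the value vectors.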

Commentary

Dot-product attention is computationally efficient and can be parallelized easily. The scaling factor 1/√(d_k) counteracts the growth of dot-product magnitudes as the dimensionality of keys/queries increases; without it, large scores push the softmax into regions where gradients are extremely small. Multiple heads allow the model to look at different positions/relationships simultaneously, which helps capture various types of information (e.g., syntax, semantics).
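To make the multi-head idea concrete, here is a small sketch that projects the input once per head, runs attention in each head, concatenates the results, and applies an output projection. It is a simplified illustration: the projection matrices below are hand-picked selection matrices (hypothetical, chosen so the result is easy to check), whereas in a real model they are learned.

```python
import math


def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention on matrices of shape (n, d_k) and (n, d_v)."""
    d_k = len(K[0])
    K_t = [list(col) for col in zip(*K)]
    scores = matmul(Q, K_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V)


def multi_head_attention(X, heads, W_o):
    """heads is a list of (W_q, W_k, W_v) triples, one per head; W_o mixes the concatenation."""
    per_head = [attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v))
                for W_q, W_k, W_v in heads]
    # Concatenate the head outputs position by position.
    concat = [sum((head[i] for head in per_head), []) for i in range(len(X))]
    return matmul(concat, W_o)


# Toy setup: d_model = 4, h = 2 heads, so each head works in d_k = d_model / h = 2 dims.
first_half = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [0.0, 0.0]]
second_half = [[0.0, 0.0], [0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
heads = [(first_half,) * 3, (second_half,) * 3]
W_o = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

X = [[1.0, 2.0, 3.0, 4.0], [4.0, 3.0, 2.0, 1.0], [0.0, 1.0, 0.0, 1.0]]
Y = multi_head_attention(X, heads, W_o)
print(len(Y), len(Y[0]))  # output keeps the shape (sequence length, d_model)
```

Splitting d_model across heads keeps the total cost close to that of a single full-width attention while letting each head compute its own attention pattern.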

3.3 Position-wise Feed-Forward Networks

Original (Condensed)

Each layer in the encoder and decoder has a feed-forward network that is applied to each position separately and identically, consisting of two linear transformations with a ReLU in between.

Recap

After multi-head attention, each token’s representation goes through a small “fully connected” or “feed-forward” sub-network. This is done independently per position.

Commentary

This structure ensures that after attention-based mixing, each position is then transformed in a non-linear way. It is reminiscent of using small per-position multi-layer perceptrons to refine each embedding.
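The position-wise network can be written as FFN(x) = max(0, x W1 + b1) W2 + b2, applied to every position with the same weights. Below is a minimal sketch with tiny hand-picked weights (hypothetical values chosen so the result can be checked by hand); real models use much larger learned matrices.

```python
def linear(x, W, b):
    """Compute x W + b for a vector x and a matrix W stored as a list of rows."""
    return [sum(xi * w for xi, w in zip(x, col)) + bj
            for col, bj in zip(zip(*W), b)]


def ffn(x, W1, b1, W2, b2):
    """Two linear maps with a ReLU in between: max(0, x W1 + b1) W2 + b2."""
    hidden = [max(0.0, h) for h in linear(x, W1, b1)]
    return linear(hidden, W2, b2)


def position_wise_ffn(X, W1, b1, W2, b2):
    """Apply the same feed-forward network to each position independently."""
    return [ffn(x, W1, b1, W2, b2) for x in X]


# Toy dimensions: d_model = 2, inner dimension d_ff = 3.
W1 = [[1.0, -1.0, 0.0], [0.0, 1.0, 1.0]]
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b2 = [0.0, 0.0]

X = [[1.0, 2.0], [2.0, -1.0]]
print(position_wise_ffn(X, W1, b1, W2, b2))  # [[3.0, 3.0], [2.0, 0.0]]
```

Note that no information flows between positions here; all mixing across positions happens in the attention sub-layer.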

3.4 Embeddings and Softmax

Original (Condensed)

Token embeddings and the final output linear transformation share the same weight matrix; in the embedding layers, those weights are multiplied by sqrt(d_model). The model uses learned embeddings to convert input and output tokens to vectors of dimension d_model.

Recap

The model uses standard embedding layers for tokens and ties the same weights in both the embedding and the pre-softmax projection. This helps with parameter efficiency and sometimes improves performance.

Commentary

Weight tying is a known trick that can save on parameters and can help the embedding space align with the output space in generative tasks.
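Weight tying can be sketched as one matrix used in two directions: row lookup for embedding and a dot product against every row for the output logits. The class name `TiedEmbedding` and the toy matrix are assumptions for illustration; the sqrt(d_model) scaling on the embedding side follows the paper's description.

```python
import math


class TiedEmbedding:
    """A single (vocab_size x d_model) matrix shared by the embedding and output layers."""

    def __init__(self, weights):
        self.weights = weights
        self.d_model = len(weights[0])

    def embed(self, token_id):
        # Look up the token's row and scale it by sqrt(d_model).
        scale = math.sqrt(self.d_model)
        return [w * scale for w in self.weights[token_id]]

    def logits(self, hidden):
        # Pre-softmax scores: dot product of the hidden state with every token's row.
        return [sum(h * w for h, w in zip(hidden, row)) for row in self.weights]


# Toy vocabulary of 3 tokens with d_model = 4.
table = TiedEmbedding([
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 2.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 3.0],
])
print(table.embed(1))                      # [0.0, 4.0, 0.0, 0.0]
print(table.logits([0.0, 1.0, 0.0, 0.0]))  # [0.0, 2.0, 0.0]
```

Because both operations read the same rows, a hidden state that points in the direction of a token's embedding receives a high logit for that token.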

3.5 Positional Encoding

Original (Condensed)

Because there is no recurrence or convolution, the Transformer needs positional information. The paper adds a sinusoidal positional encoding to the input embeddings; the authors hypothesize that this makes it easy for the model to learn to attend by relative positions. Learned positional embeddings perform similarly, but sinusoidal encodings might let the model generalize to sequence lengths not seen during training.

Recap

The Transformer adds sine/cosine signals of varying frequencies to the embeddings so that each position has a unique pattern. This is essential to preserve ordering information.

Commentary

Without positional encodings, the self-attention mechanism would treat input tokens as an unstructured set. Positional information ensures that the model knows how tokens relate to one another in a sequence.
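The sinusoidal encoding in the paper is PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). A minimal sketch of that formula follows; the function name and the small sizes are chosen only for illustration.

```python
import math


def positional_encoding(max_len, d_model):
    """Return a max_len x d_model table of sinusoidal position encodings."""
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        # i walks over the even dimensions, so i / d_model equals 2i'/d_model in the formula.
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe


pe = positional_encoding(max_len=8, d_model=4)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0]: sin(0) and cos(0) in every pair of dimensions
for row in pe[:3]:
    print([round(v, 3) for v in row])
```

In the model these vectors are added element-wise to the token embeddings, so the same token at two different positions produces two different input vectors.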

4 Why Self-Attention

Original (Condensed)

The authors compare self-attention to recurrent and convolutional layers in terms of computation cost and how quickly signals can travel between distant positions in a sequence. Self-attention is more parallelizable and has O(1) maximum path length (all tokens can attend to all others in one step), whereas convolutions and recurrences require multiple steps to connect distant positions. The shorter paths can help with learning long-range dependencies.

Recap

Self-attention:

- Parallelizable across sequence positions.

- Constant number of sequential operations per layer.

- Short paths between positions -> easier to learn long-range dependencies.

Commentary

The authors argue that self-attention layers are efficient (especially when sequence length is not extremely large) and effective at modeling dependencies. This is a key motivation for the entire design.
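The comparison can be made tangible by plugging numbers into the growth rates the paper reports for each layer type: O(n^2 d) operations for self-attention, O(n d^2) for a recurrent layer, and O(k n d^2) for a convolution with kernel width k. The sketch below treats these as exact counts, which is an assumption (constants are ignored), and uses the O(log_k n) path length that applies to dilated convolutions. The function name `layer_costs` is hypothetical.

```python
import math


def layer_costs(n, d, k=3):
    """Order-of-magnitude costs per layer for sequence length n and width d (constants ignored)."""
    return {
        "self-attention": {"ops": n * n * d, "sequential": 1, "max_path": 1},
        "recurrent": {"ops": n * d * d, "sequential": n, "max_path": n},
        "convolutional": {"ops": k * n * d * d, "sequential": 1,
                          "max_path": math.ceil(math.log(n, k))},
    }


# A sentence of 50 tokens with representation width 512.
costs = layer_costs(n=50, d=512)
for name, c in costs.items():
    print(f"{name:15s} ops={c['ops']:>12,d} sequential={c['sequential']:>3d} "
          f"max_path={c['max_path']}")
```

With n = 50 and d = 512, self-attention needs fewer operations than the recurrent layer because n is smaller than d; the balance reverses once sequences become longer than the representation width.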

In-class question: What is the probability assigned to the ground-truth class in the ground-truth distribution after label smoothing when training the Transformer in the default setting of this paper?

5 Training

5.1 Training Data and Batching

Original (Condensed)

The authors use WMT 2014 English-German (about 4.5M sentence pairs) and English-French (36M sentences). They use subword tokenization (byte-pair encoding for English-German, word-piece for English-French) to handle large vocabularies. Training batches contain roughly 25k source and 25k target tokens.

Recap

They describe the datasets and how the text is batched using subword units. This avoids issues with out-of-vocabulary tokens.

Commentary

Subword tokenization was pivotal in neural MT systems because it handles rare words well. Batching by approximate length helps the model train more efficiently and speeds up training on GPUs.
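One simple way to batch by approximate length is to sort sentence pairs by length and pack them greedily until a token budget is reached. The sketch below is an assumption about how such batching could be done, not the authors' pipeline; `batch_by_length` and the tiny budget of 6 tokens are hypothetical (the paper's budget is roughly 25k tokens per side).

```python
def batch_by_length(pairs, max_tokens):
    """Group (source_tokens, target_tokens) pairs into batches under a per-side token budget."""
    # Sorting puts similar lengths next to each other, which reduces padding.
    ordered = sorted(pairs, key=lambda p: max(len(p[0]), len(p[1])))
    batches, current, src_count, tgt_count = [], [], 0, 0
    for src, tgt in ordered:
        over_budget = (src_count + len(src) > max_tokens
                       or tgt_count + len(tgt) > max_tokens)
        if current and over_budget:
            batches.append(current)
            current, src_count, tgt_count = [], 0, 0
        current.append((src, tgt))
        src_count += len(src)
        tgt_count += len(tgt)
    if current:
        batches.append(current)
    return batches


# Toy corpus: token lists of different lengths.
pairs = [
    (["a"] * 2, ["b"] * 2),
    (["a"] * 5, ["b"] * 4),
    (["a"] * 3, ["b"] * 3),
    (["a"] * 6, ["b"] * 6),
]
batches = batch_by_length(pairs, max_tokens=6)
print([len(batch) for batch in batches])  # [2, 1, 1]
```

Short sentences end up sharing a batch, while long ones get batches with fewer examples, so every batch carries a similar number of tokens.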

5.2 Hardware and Schedule

Original (Condensed)

They trained on a single machine with 8 NVIDIA P100 GPUs. The base model was trained for 100k steps (about 12 hours), while the bigger model took around 3.5 days. Each training step for the base model took ~0.4 seconds on this setup.

Recap

Base models train surprisingly quickly: about half a day is enough for high-quality results. The big model uses more parameters and trains longer.

Commentary

This training time is significantly shorter than earlier neural MT models, demonstrating one practical advantage of a highly parallelizable architecture.
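The reported training times follow from the step counts and step durations. The short calculation below checks this; the base-model figures come from the text above, while the big-model figures (300k steps at about 1.0 second per step) are taken from the paper and are not stated in the condensed passage.

```python
def training_hours(steps, seconds_per_step):
    """Wall-clock training time in hours, ignoring evaluation and checkpointing overhead."""
    return steps * seconds_per_step / 3600.0


base_hours = training_hours(100_000, 0.4)
big_days = training_hours(300_000, 1.0) / 24.0

print(f"base model: {base_hours:.1f} hours")  # base model: 11.1 hours
print(f"big model:  {big_days:.1f} days")     # big model:  3.5 days
```

The base-model estimate of about 11 hours is consistent with the "about 12 hours" quoted in the paper.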

5.3 Optimizer

Original (Condensed)

The paper uses the Adam optimizer with specific hyperparameters (β1=0.9, β2=0.98, ε=1e-9). The learning rate increases linearly for the first 4k steps, then decreases proportionally to step^-0.5.

Recap

A custom learning-rate schedule is used, with a “warm-up” phase followed by a decay. The warm-up stabilizes training early on, and the decay then gradually lowers the rate as training progresses.

Commentary

This “Noam” learning rate schedule (as it is often called) is well-known in the community. It starts with a very small learning rate and ramps it up linearly during warm-up, which prevents divergence early on, and then decays it with the inverse square root of the step number.
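The schedule in the paper is lrate = d_model^-0.5 * min(step^-0.5, step * warmup_steps^-1.5). A minimal sketch follows; the function name `noam_lr` is an assumption, and the defaults use the base model's d_model = 512 and the 4000 warm-up steps mentioned above.

```python
def noam_lr(step, d_model=512, warmup_steps=4000):
    """Learning rate with linear warm-up followed by inverse-square-root decay."""
    step = max(step, 1)  # avoid division by zero at step 0
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)


for step in (1, 1000, 2000, 4000, 8000, 100_000):
    print(f"step {step:>7d}: lr = {noam_lr(step):.6f}")
```

The two arguments of `min` are equal exactly at `step == warmup_steps`, so the learning rate peaks there (about 0.0007 with these defaults) and decreases afterwards.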

5.4 Regularization

Original (Condensed)

Three types of regularization: (1) dropout applied to the output of each sub-layer and to the sums of embeddings and positional encodings, (2) label smoothing of 0.1, (3) checkpoint averaging (not described in this section of the paper, but used for the reported results). Label smoothing slightly hurts perplexity but improves translation BLEU.

Recap

- Dropout helps avoid overfitting.

- Label smoothing makes the model less certain about each token prediction, improving generalization.

Commentary

By forcing the model to distribute probability mass across different tokens, label smoothing can prevent the network from becoming overly confident in a small set of predictions, thus improving real-world performance metrics like BLEU.
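Label smoothing replaces the one-hot target with a softened distribution. The sketch below uses one common formulation, in which a fraction epsilon of the probability mass is spread uniformly over all classes (including the true one). This is an assumption: other implementations spread epsilon over the remaining classes only, which changes the exact value assigned to the true class.

```python
def smooth_labels(true_index, num_classes, epsilon=0.1):
    """Mix a one-hot target with a uniform distribution over all classes."""
    uniform = epsilon / num_classes
    return [(1.0 - epsilon) + uniform if i == true_index else uniform
            for i in range(num_classes)]


# Toy vocabulary of 5 tokens with the correct token at index 2.
target = smooth_labels(true_index=2, num_classes=5, epsilon=0.1)
print([round(p, 2) for p in target])  # [0.02, 0.02, 0.92, 0.02, 0.02]
```

The model is then trained with cross-entropy against this distribution, so it is never rewarded for pushing the probability of a single token all the way to 1.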

6 Results

6.1 Machine Translation

Original (Condensed)

On WMT 2014 English-German, the big Transformer achieved 28.4 BLEU, surpassing all previously reported results (including ensembles). On English-French, it got 41.8 BLEU with much less training cost compared to other models. The base model also outperforms previous single-model baselines.

Recap

The Transformer sets a new state of the art on English-German and matches or exceeds prior results on English-French, at a fraction of the training cost.

Commentary

This was a landmark result, as both speed and quality improved. The authors highlight not just the performance, but the “cost” in terms of floating-point operations, showing how the Transformer is more efficient.

6.2 Model Variations

Original (Condensed)

They explore different hyperparameters, e.g., number of attention heads, dimension of queries/keys, feed-forward layer size, and dropout. They find that more heads can help but too many heads can degrade performance. Bigger dimensions improve results at the expense of more computation.

Recap

Experiments confirm that the Transformer’s performance scales with model capacity. Properly tuned dropout is vital. Both sinusoidal and learned positional embeddings perform comparably.

Commentary

This section is valuable for practitioners, as it provides insight into how to adjust model size and regularization. It also confirms that the approach is flexible.

6.3 English Constituency Parsing

Original (Condensed)

They show that the Transformer can also tackle English constituency parsing, performing competitively with top models. Trained only on the WSJ dataset, a 4-layer Transformer reaches 91.3 F1, and in a semi-supervised setting it improves to 92.7 F1.

Recap

It isn’t just about machine translation: the model generalizes to other tasks with structural dependencies, illustrating self-attention’s adaptability.

Commentary

Constituency parsing requires modeling hierarchical relationships in sentences. The Transformer’s ability to attend to any part of the input helps capture these structures without specialized RNNs or grammar-based methods.

7 Conclusion

Original (Condensed)